X-Rays in Diagnostic Medicine

Wilhelm Conrad Roentgen, a German physicist, discovered x-rays in November 1895. X-rays are a form of electromagnetic radiation, ranging in wavelength from approximately 10⁻⁹ to 10⁻¹² meters, or about 10 to 0.01 angstroms (Å). Unlike visible light, x-rays have sufficient energy to penetrate human tissues, and it is this property that makes them so useful in diagnostic imaging. Roentgen discovered x-rays while investigating discharge from an induction coil through a partially evacuated glass tube.

The tube was covered in black paper and the room was in darkness, yet he noticed that a fluorescent screen in the room became illuminated. Other scientists had encountered similar phenomena while experimenting with electrical discharges through gas-filled tubes. Sir William Crookes, a leading scientist in the field, found photographic plates in his laboratory fogged but did not realize that this was a result of his experiments, and he returned them to the manufacturer. Roentgen, however, was the first to realize the significance of the effect, and he received the first Nobel Prize for physics in 1901.

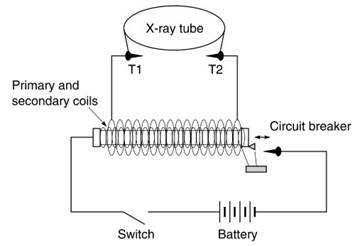

X-rays, as opposed to visible light rays, penetrate matter because of their higher energy. This is a result of high-energy electrons generated in the x-ray tube; the energy of electrons incident on the target sets a maximum limit on the energy of the x-rays produced. The incident electrons gain energy from the high electrical potential across the tube. Up to the 1920s most x-ray tubes used induction coils (Figure 1).
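The limit that the tube potential places on x-ray energy can be made concrete with a short calculation. The sketch below assumes the standard relations that an electron accelerated through V volts gains V electronvolts, and that a photon of energy E has wavelength hc/E. The function names and the 100 kV example value are illustrative assumptions, not taken from the text.

```python
# Physical constants in SI units.
PLANCK_J_S = 6.62607015e-34          # Planck constant, J*s
LIGHT_SPEED_M_S = 299_792_458.0      # speed of light, m/s
ELECTRON_CHARGE_C = 1.602176634e-19  # elementary charge, C


def max_photon_energy_kev(tube_kilovolts: float) -> float:
    """Upper limit on photon energy (keV) for a given tube potential (kV).

    An electron crossing a potential of V kilovolts arrives with V keV of
    kinetic energy, and no emitted photon can carry more than that.
    """
    if tube_kilovolts <= 0:
        raise ValueError("tube potential must be positive")
    return tube_kilovolts


def min_wavelength_m(tube_kilovolts: float) -> float:
    """Shortest x-ray wavelength (meters) the tube can emit: hc / E_max."""
    energy_joules = max_photon_energy_kev(tube_kilovolts) * 1e3 * ELECTRON_CHARGE_C
    return PLANCK_J_S * LIGHT_SPEED_M_S / energy_joules


if __name__ == "__main__":
    kilovolts = 100.0  # hypothetical tube potential, chosen only for illustration
    wavelength = min_wavelength_m(kilovolts)
    print(f"{kilovolts:.0f} kV: E_max = {max_photon_energy_kev(kilovolts):.0f} keV, "
          f"minimum wavelength = {wavelength:.3e} m ({wavelength * 1e10:.3f} angstrom)")
```

Under these assumptions a 100 kV potential gives a minimum wavelength of roughly 0.12 angstrom, which sits inside the wavelength range quoted for x-rays earlier in the article.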

Figure 1. Induction coil

The inner coil is connected to the battery through a switch and an interrupter device. Once the switch is closed, current flows in the circuit, and the iron core becomes magnetized. The interrupter consists of a screw and a sprung switch. The iron head of the sprung switch is attracted by the magnet and disconnects from the contact screw, breaking the current path. The core becomes demagnetized, and the sprung switch springs back to make contact with the screw, which once more allows current to pass, magnetizing the core. This process repeats at high speed, causing a varying current in the inner coil, which induces an alternating current of high potential in the secondary coil.

This places a potential across the terminals T1 and T2, which are in parallel with the x-ray tube. The potential is sufficient for a spark to cross the gap between the two terminals. If the terminals are moved further apart, a point is reached at which the tube's resistance is less than the resistance of the gap between the terminals, and the potential is then available across the tube. The oldest method of specifying the penetrating power of x-rays was by determining the length of the spark gap (the kilovoltage was specified as the equivalent spark gap in air).

The military were among the first to take advantage of x-ray technology to locate bullets and shrapnel in wounded soldiers. Power sources were a particular problem for x-ray systems in the field, however, and solutions ranged from gasoline engine-driven dynamos to biplane engines in World War I, with even pedal power from a tandem bicycle used in the Sudan in 1898 (with the bike used for charging storage batteries). High-voltage transformers were introduced around 1919 and became the basis of high-voltage generators for x-ray tubes.

In gas-filled tubes the potential across the tube caused ionization of the gas, and electrons were attracted to the positive side of the tube. When the stream of electrons hit the target (originally the end of the glass tube), heat and x-rays were produced. Most of the energy was converted to heat, which placed a limit on the amount of time x-rays could be produced because the target would melt.

The two most important early improvements in x-ray gas tube design were by Campbell Swinton, who introduced a sheet of platinum as a metal target, and by Herbert Jackson, who used concave cathodes to focus electrons onto a small area of the metal target, producing more sharply defined images. The gas tube was unreliable, as x-ray production depended on the variable factor of the gas content. Richardson’s discovery of thermionic emission in 1902 formed the basis for a major advance in x-ray tube design.

W. D. Coolidge of the General Electric Company developed a thermionic x-ray tube (the Coolidge tube) in 1913 that used an almost perfect vacuum. Electrons are boiled off the cathode (thermionic emission) when current passes through the filament circuit. These electrons are accelerated across the tube, producing x-rays when they strike the anode target. By 1915 Coolidge had developed rotating anodes, achieving target rotation of 750 revolutions per minute, which effectively increased the area of the anode target and increased the anode’s ability to dissipate heat.

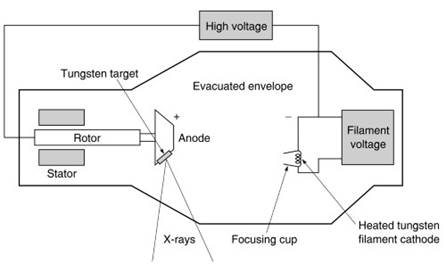

Modern x-ray tubes have most of the design features developed in the first 40 years of research; however, tubes generally have a selection of cathode filaments, anodes rotate at much higher speeds, the target is usually tungsten (which has roughly twice the melting point of platinum), and improved designs for high-voltage generators and regulators have allowed a greater degree of control over x-ray energy (Figure 2).

Figure 2. X-ray tube

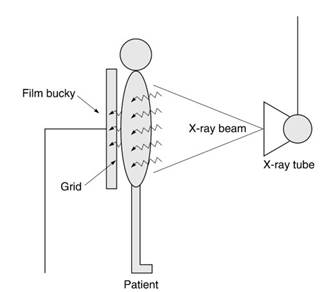

X-rays have a number of properties that allow their detection, and they affect a photographic emulsion in the same way as light. Films are placed behind the patient to capture the emerging x-rays (Figure 3). Screens, which reduce exposure requirements in both dose and time, have been used since the early days of x-rays. When x-rays interact with matter they may be scattered at different angles, and scatter disturbs the correspondence between points in the image and in the patient, resulting in reduced image contrast.

Figure 3. Basic chest x-ray radiography system
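The loss of contrast caused by scatter can be estimated with a simple relation. The sketch below assumes the common textbook approximation that contrast falls by a factor of 1 / (1 + S/P), where S/P is the ratio of scattered to primary radiation reaching the detector. The relation, the function name, and the example numbers are not from the text and are given only for illustration.

```python
def contrast_with_scatter(primary_contrast: float, scatter_to_primary: float) -> float:
    """Contrast remaining once scatter is added to the primary beam.

    primary_contrast   -- contrast that would be seen with no scatter at all
    scatter_to_primary -- ratio of scattered to primary intensity at the detector
    """
    if scatter_to_primary < 0:
        raise ValueError("scatter-to-primary ratio cannot be negative")
    return primary_contrast / (1.0 + scatter_to_primary)


if __name__ == "__main__":
    # Hypothetical values: a feature with 10% scatter-free contrast,
    # viewed with three units of scatter for every unit of primary radiation.
    print(contrast_with_scatter(0.10, 3.0))
    # Lowering the ratio, as scatter rejection aims to do, restores contrast.
    print(contrast_with_scatter(0.10, 0.5))
```

With no scatter the function returns the primary contrast unchanged, and the result falls steadily as the scatter-to-primary ratio rises.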

Moving grids for scatter rejection were introduced in the 1920s. The grid blocked photons emerging from the patient at oblique angles to the detector. The grid was moved during exposures to prevent the grid lines from appearing on the image. Digital radiographic techniques, developed in the 1980s by the Fuji Corporation, introduced a photostimulable phosphor screen as a primary image receptor. Use of digital detectors for radiography grew in the 1980s and 1990s, although film remained the dominant detector type.

Another property exploited for the detection of x-rays is that they cause fluorescence in certain materials. By 1896, Thomas Edison had examined 1800 chemicals to detect and compare their x-ray fluorescent properties. His skiascope used a platino-barium cyanide fluoroscopic plate installed in a visor, which was held up to the eyes to allow observation of x-ray fluorescence. Fluoroscopic images are dynamic, with the illumination pattern on the screen responding to changes in the object being imaged.

Fluorescence produced very low levels of illumination, and in many applications the eye had to be dark-adapted before images could be properly viewed. Image intensifiers, which were developed in the 1960s, greatly improved fluoroscopic imaging. These intensifiers convert the fluorescent light into electrons and accelerate them across to an output plate, which amplifies the signal. The output plate, being smaller, produces an additional geometric gain. The output screen is linked to a camera that allows display of dynamic images on a monitor.
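The two sources of gain described above can be combined in a small calculation. The sketch below assumes the usual convention that the geometric (minification) gain is the ratio of input to output screen areas, and that the overall brightness gain is this value multiplied by the gain from accelerating the electrons (often called flux gain). The function names and the example dimensions are illustrative assumptions.

```python
def minification_gain(input_diameter_mm: float, output_diameter_mm: float) -> float:
    """Geometric gain from concentrating the image onto a smaller output screen.

    For circular screens the area ratio equals the squared diameter ratio.
    """
    if input_diameter_mm <= 0 or output_diameter_mm <= 0:
        raise ValueError("screen diameters must be positive")
    return (input_diameter_mm / output_diameter_mm) ** 2


def brightness_gain(input_diameter_mm: float, output_diameter_mm: float,
                    flux_gain: float) -> float:
    """Overall gain: geometric gain multiplied by the electron-acceleration gain."""
    return minification_gain(input_diameter_mm, output_diameter_mm) * flux_gain


if __name__ == "__main__":
    # Hypothetical intensifier: 230 mm input screen, 25 mm output screen,
    # and an assumed flux gain of 50.
    print(minification_gain(230.0, 25.0))
    print(brightness_gain(230.0, 25.0, 50.0))
```

Because the geometric term depends on the square of the diameter ratio, a modest reduction in output screen size contributes a large share of the total gain.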

Digital technology has revolutionized x-ray imaging. Digital subtraction angiography (DSA), which combines a contrast agent with digital image processing for vascular imaging, was developed in the early 1980s. The contrast agent, a dye with high x-ray attenuation properties, is injected into the bloodstream. In DSA, images of a region before and after the dye is injected can be digitally subtracted. The resultant image clearly shows the vessel (or area where contrast was injected), while the overlying bone structures, which might inhibit visualization, are subtracted.
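The subtraction step can be illustrated on a toy image. The sketch below assumes that each pixel stores a positive transmitted intensity and subtracts the logarithms of the two images, a common choice because attenuation is exponential; the text itself does not specify the arithmetic used. The function name and the 3x3 pixel values are hypothetical.

```python
import math


def subtract_mask(mask, live):
    """Return ln(mask) - ln(live) for every pixel.

    mask -- image taken before contrast injection (rows of positive intensities)
    live -- image taken after contrast injection, same shape as mask
    Structures present in both images cancel; only the contrast-filled
    vessel leaves a non-zero signal.
    """
    if len(mask) != len(live) or any(len(m) != len(l) for m, l in zip(mask, live)):
        raise ValueError("mask and live images must have the same shape")
    return [
        [math.log(m) - math.log(l) for m, l in zip(mask_row, live_row)]
        for mask_row, live_row in zip(mask, live)
    ]


# Toy data: the middle row lies behind "bone" (lower intensity in both images).
mask = [
    [100.0, 100.0, 100.0],
    [40.0, 40.0, 40.0],
    [100.0, 100.0, 100.0],
]
# After injection a vessel down the middle column halves the intensity there.
live = [
    [100.0, 50.0, 100.0],
    [40.0, 20.0, 40.0],
    [100.0, 50.0, 100.0],
]

difference = subtract_mask(mask, live)

if __name__ == "__main__":
    for row in difference:
        print([round(value, 3) for value in row])
```

In this toy case the bone row vanishes from the difference image, and the vessel column carries the same signal whether or not bone overlies it, which is the behavior the paragraph above describes.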

In the first few years after the discovery of x-rays, it became apparent that there were hazards associated with their use. Accounts of the early years contain numerous reports of injuries and even the death of radiation workers. In 1904 Beck described three levels of Roentgen ray burns, varying from itching symptoms to painful blisters and skin discoloration and ulcers.

In 1915 the Roentgen Society in London adopted radiation protection recommendations, and the first dose limits were proposed in 1925. The International Committee on X-ray and Radium Protection (ICXRP, later the ICRP) was formed in 1928. In 1954 the U.S. National Committee on Radiation Protection (NCRP) put forward the concept of ALARA, an acronym for "as low as reasonably achievable."

Because x-rays are a form of ionizing radiation, all examinations must be clinically justified and doses kept "as low as reasonably achievable." The ALARA concept was stated in the first ICRP publication in 1959. Radiation protection recommendations continued to be reviewed and updated throughout the twentieth century in an effort to optimize safety in the use of diagnostic x-rays.

X-ray technology applications routinely used in hospitals include film screen systems, fluoroscopy systems that can provide dynamic image information, and computed tomography (CT), which provides high-contrast image slices through the patient. The many clinical applications include examination of broken bones, angiography procedures, and identification of tumors, such as in mammography. In interventional procedures, x-ray technology is used to assist radiologists and surgeons in removing blockages in blood vessels and inserting pacemakers.

High-energy x-ray systems may also be used therapeutically to provide lethal radiation doses to cancerous tumors. Future developments are likely to concentrate on digital systems, and many hospitals are implementing wholly digital departments. Picture archiving and communication systems (PACS) allow images to be stored centrally and transmitted to display devices around the hospital. Instead of looking at x-ray films on light boxes, clinicians examine images displayed on high-quality monitors.

Date added: 2023-10-26