Content-based Web Video Retrieval

Content-based video retrieval, a challenging yet promising approach to video search, has attracted the attention of many researchers over the last few decades. Many methods have been proposed, and some systems have been built.

Currently, with the advent of new video techniques and ever-developing network technology, video-sharing websites and Internet-based video services are becoming increasingly important on the Web. As a result, many videos are generated or uploaded to the Web every day, which brings new challenges to content-based video retrieval.

Large-Scale Web Video Categorization. Video categorization classifies videos into predefined categories. Traditionally, most categorization methods assign videos to typical genres, such as movies, commercials, and cartoons. For web videos, however, the categories include not only genres but also semantic concepts, such as autos and animals.

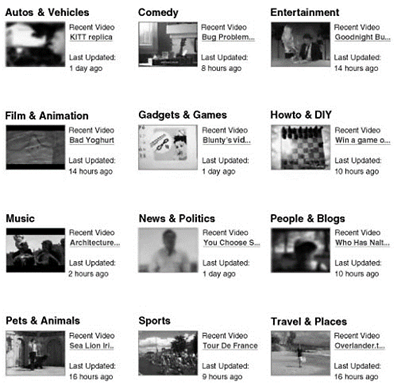

Figure 5 shows the category definition excerpted from YouTube (YouTube, LLC, Mountain View, CA).

Using multiple modalities is a promising approach to categorizing web videos. Low-level and semantic features can be drawn from different information sources, such as the visual track, the audio track, and the text surrounding the video on its web page. A separate classifier can be trained on each type of feature, and improved results can then be obtained by fusing the outputs of these classifiers.
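The fusion step described above can be sketched as a weighted average of per-category probabilities, one set per modality. This is only a minimal illustration: the function names `fuse_scores` and `predict_category`, the modality names, the weights, and the probability values are all assumptions made for the example, and a real system would obtain the probabilities from trained classifiers.

```python
def fuse_scores(per_modality_probs, weights=None):
    """Late fusion: weighted average of category probabilities.

    per_modality_probs maps a modality name to a dict of
    category -> probability produced by that modality's classifier.
    All modalities are assumed to score the same set of categories.
    """
    modalities = list(per_modality_probs)
    if weights is None:
        # Assumption: equal trust in every modality by default.
        weights = {m: 1.0 for m in modalities}
    total = sum(weights[m] for m in modalities)
    categories = per_modality_probs[modalities[0]].keys()
    return {
        c: sum(weights[m] * per_modality_probs[m][c] for m in modalities) / total
        for c in categories
    }


def predict_category(per_modality_probs, weights=None):
    """Return the category with the highest fused score."""
    fused = fuse_scores(per_modality_probs, weights)
    return max(fused, key=fused.get)


# Hypothetical classifier outputs for one video.
probs = {
    "visual": {"autos": 0.6, "animals": 0.4},
    "audio": {"autos": 0.3, "animals": 0.7},
    "text": {"autos": 0.8, "animals": 0.2},
}
print(predict_category(probs))  # autos
```

With equal weights the text and visual classifiers outvote the audio classifier. In practice the weights would be tuned on held-out data rather than set by hand.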

Figure 5. The categories excerpted from YouTube

Content-Based Search Result Re-Ranking. For video retrieval, many information sources can contribute to the final ranking, including visual, audio, concept detection, and text (ASR, OCR, etc.). Combining the evidence from the various modalities often improves the performance of content-based search significantly.

One intuitive way to combine these modalities is to fuse the ranking scores output by the search engines for each modality. However, simply fusing them can reduce accuracy and may propagate noise. Some researchers propose training a separate fusion model for each query class. Other efforts focus on how to classify queries automatically, which remains an open problem.
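As a rough sketch of query-class-dependent fusion, the example below normalizes each modality's scores to a common range and then combines them with weights chosen by query class. The class names (`named_person`, `scene`), the weight table, and the scores are hypothetical. In the approach described above the weights would be learned per class, not written by hand.

```python
def min_max(scores):
    """Rescale raw scores to [0, 1] so modalities are comparable."""
    lo, hi = min(scores.values()), max(scores.values())
    if hi == lo:
        return {k: 0.0 for k in scores}
    return {k: (v - lo) / (hi - lo) for k, v in scores.items()}


# Hypothetical per-class fusion weights (assumed, not learned here).
CLASS_WEIGHTS = {
    "named_person": {"text": 0.8, "visual": 0.2},
    "scene": {"text": 0.3, "visual": 0.7},
}


def fuse_rankings(modality_scores, query_class):
    """Return video ids sorted by the class-specific weighted score."""
    weights = CLASS_WEIGHTS[query_class]
    fused = {}
    for modality, scores in modality_scores.items():
        for video_id, score in min_max(scores).items():
            fused[video_id] = fused.get(video_id, 0.0) + weights.get(modality, 0.0) * score
    return sorted(fused, key=fused.get, reverse=True)


# Raw scores from two hypothetical single-modality search engines.
scores = {
    "text": {"a": 10, "b": 5, "c": 0},
    "visual": {"a": 0.1, "b": 0.2, "c": 0.9},
}
print(fuse_rankings(scores, "named_person"))  # ['a', 'b', 'c']
print(fuse_rankings(scores, "scene"))         # ['c', 'a', 'b']
```

The same raw scores yield different final orders depending on the query class, which is the point of training the fusion model separately per class.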

In video retrieval experiments, text information often yields better performance than any other individual modality. Hence, some methods first use text information to rank the videos and then use other evidence to adjust the order of the videos in the ranked list. This is called reranking. Some researchers have used a technique called pseudo relevance feedback to rerank video search results.

They assume that the top and bottom fractions of the ranked list can be treated, with high confidence, as positive and negative samples, respectively, and they use these samples to train a model that reranks the search results.
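The pseudo relevance feedback idea can be illustrated with a deliberately simple model. The sketch below treats the top `k` and bottom `k` results of a text-based ranking as pseudo-positive and pseudo-negative samples, computes the centroid of each group in a visual feature space, and reranks by how much closer a video is to the positive centroid than to the negative one. The nearest-centroid model is a stand-in chosen for brevity, not the classifier used in the published work, and the function name `prf_rerank` and the feature vectors are made up for the example.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def prf_rerank(ranked_ids, features, k=2):
    """Rerank a text-based result list with pseudo relevance feedback.

    ranked_ids: video ids in text-rank order (best first).
    features:   video id -> visual feature vector.
    k:          number of top/bottom results used as pseudo labels
                (assumes the list has at least 2 * k entries).
    """
    positive = centroid([features[v] for v in ranked_ids[:k]])
    negative = centroid([features[v] for v in ranked_ids[-k:]])

    def score(video_id):
        # Higher when the video is near the positives and far from the negatives.
        vector = features[video_id]
        return math.dist(vector, negative) - math.dist(vector, positive)

    # sorted() is stable, so ties keep their original text-rank order.
    return sorted(ranked_ids, key=score, reverse=True)


text_ranking = ["v1", "v2", "v3", "v4", "v5", "v6"]
visual = {
    "v1": [1.0, 0.0], "v2": [0.9, 0.1], "v3": [0.0, 1.0],
    "v4": [0.8, 0.2], "v5": [0.1, 0.9], "v6": [0.0, 1.0],
}
print(prf_rerank(text_ranking, visual))
```

In this toy data, `v4` looks like the top results visually and so moves ahead of `v3`, which resembles the bottom results. The sketch also makes the weakness visible: if the top of the text ranking is wrong, the pseudo labels are wrong too.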

However, this assumption does not hold in most situations, because even state-of-the-art text-based video search performs poorly. One line of work proposes two methods that rerank video search results from different viewpoints. The first is an information-theory-driven method called IB-reranking. It assumes that videos with many visually similar neighbors of high text relevance should be ranked near the top of the search result. The second is context ranking, which formulates the reranking problem in a random walk framework.
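To give a feel for the random walk formulation, the sketch below runs a random walk with restart over a graph whose nodes are videos and whose edge weights are visual similarities, using the normalized text scores as the restart distribution. This is a generic personalized-PageRank-style iteration written for illustration; it is not the published formulation, and `random_walk_rerank`, `alpha`, and the sample data are assumptions.

```python
def random_walk_rerank(ids, text_scores, similarity, alpha=0.5, iterations=50):
    """Rerank videos with a random walk over a visual-similarity graph.

    ids:         list of video ids.
    text_scores: video id -> non-negative text relevance score.
    similarity:  square matrix, similarity[i][j] between ids[i] and ids[j].
    alpha:       probability of restarting from the text-based prior.
    """
    n = len(ids)
    total = sum(text_scores[v] for v in ids)
    prior = [text_scores[v] / total for v in ids]

    # Row-normalize similarities into transition probabilities (no self-loops).
    transition = []
    for i in range(n):
        row = [similarity[i][j] if i != j else 0.0 for j in range(n)]
        row_sum = sum(row)
        transition.append([x / row_sum for x in row] if row_sum > 0 else [1.0 / n] * n)

    p = prior[:]
    for _ in range(iterations):
        p = [
            alpha * prior[j] + (1 - alpha) * sum(p[i] * transition[i][j] for i in range(n))
            for j in range(n)
        ]

    order = sorted(range(n), key=lambda j: p[j], reverse=True)
    return [ids[j] for j in order], p


ids = ["a", "b", "c", "d"]
text = {"a": 0.30, "b": 0.28, "c": 0.27, "d": 0.15}
# "b", "c", and "d" look alike; "a" is visually isolated.
sim = [
    [0.0, 0.1, 0.1, 0.1],
    [0.1, 0.0, 0.9, 0.9],
    [0.1, 0.9, 0.0, 0.9],
    [0.1, 0.9, 0.9, 0.0],
]
order, probabilities = random_walk_rerank(ids, text, sim)
print(order)  # ['b', 'c', 'd', 'a']
```

Although `a` has the highest text score, it has no visually similar neighbors to reinforce it, so the walk ranks it below the mutually similar cluster. The restart weight `alpha` controls how strongly the text ranking is preserved.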

Although content-based search result reranking has attracted attention from researchers and has been applied successfully to the TRECVID video search task, many issues remain to be solved. The most important is deploying it in a real-world video search system to observe whether it actually improves performance.

Content-Based Search Result Presentation. Search result presentation is a critical component of web video retrieval because it is the direct interface to users. A good search result presentation lets users browse the videos effectively and efficiently.

Typical video presentation forms include static thumbnail, motion thumbnail, and multiple thumbnails.

Static Thumbnail: an image chosen from the video frame sequence that briefly represents the video content.

Motion Thumbnail: a video clip composed of shorter clips selected from the original video sequence according to some criteria, so as to maximize the information preserved in the thumbnail. Motion thumbnail generation uses the same or similar technology as video summarization.

Multiple Thumbnails: multiple representative frames selected from the video sequence and shown to the user so that the user can quickly grasp the meaning of the video. The number of thumbnails can be determined by the complexity of the video content or can be scalable (that is, changed according to the user's request). Multiple thumbnails are also generated by video summarization algorithms.
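One simple way to pick a scalable number of representative frames is greedy farthest-point selection over per-frame feature vectors, sketched below. This is an illustrative heuristic, not a specific summarization algorithm from the literature. The function name `select_thumbnails` and the toy two-dimensional features are assumptions, and real systems would use richer features such as color histograms.

```python
import math


def select_thumbnails(frame_features, k):
    """Pick up to k frame indices that are mutually dissimilar.

    Starts with the first frame, then repeatedly adds the frame that is
    farthest (in Euclidean distance) from every frame chosen so far.
    Returns the chosen indices in temporal order.
    """
    if k <= 0 or not frame_features:
        return []
    chosen = [0]
    limit = min(k, len(frame_features))
    while len(chosen) < limit:
        remaining = [i for i in range(len(frame_features)) if i not in chosen]
        best = max(
            remaining,
            key=lambda i: min(
                math.dist(frame_features[i], frame_features[c]) for c in chosen
            ),
        )
        chosen.append(best)
    return sorted(chosen)


# Five frames forming three visually distinct groups.
frames = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [5.1, 5.0], [10.0, 0.0]]
print(select_thumbnails(frames, 3))  # [0, 2, 4]
```

Because `k` is a parameter, the same routine can serve a user who asks for more or fewer thumbnails without recomputing the features.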

Content-Based Duplicate Detection. Because techniques and tools for copying, recompressing, and transporting videos are easy to obtain, copies of the same video, differing in format, frame rate, frame size, or even some editing, are distributed over the Internet. These copies are called duplicates. In video services such as video search and video recommendation, duplicated videos that appear in the results degrade the user experience.

Therefore, duplicate detection is becoming an ever more important problem, especially for web videos. In addition, integrating the textual information attached to video duplicates helps build a better video index.

Video duplicate detection is similar to QBE-based video search in that both aim to find similar videos; they differ in how similarity between videos is measured. For video search, the returned videos should be semantically related to or match the query video, whereas for duplicate detection, only the duplicates should be returned. Duplicate detection has three components: video signature generation, similarity computation, and search strategy.

A video signature is a compact and effective representation of a video. Because a video is a temporal sequence of images, the sequence of feature vectors of frames sampled from the video can serve as the video signature. Alternatively, shot detection can be performed first, and the sequence of feature vectors extracted from the shot key frames can be used as the signature. Statistical information about the frames can also be employed as a video signature.

The similarity measure depends on the video signature algorithm; common choices include the L1 norm, the L2 norm, and so on. High-dimensional indexing techniques can be used to speed up the search for duplicates, and heuristic methods that exploit the characteristics of a specific video signature can also be employed.
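A minimal sketch of signature comparison follows, assuming the signature is a sequence of per-frame feature vectors and that two videos are compared frame by frame with an L1 or L2 distance. The helper names, the threshold of 0.1, and the toy feature values are assumptions for illustration. The brute-force comparison shown here is exactly what high-dimensional indexing would replace at scale.

```python
import math


def l1(a, b):
    """L1 (Manhattan) distance between two feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def l2(a, b):
    """L2 (Euclidean) distance between two feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def signature_distance(sig_a, sig_b, norm=l1):
    """Mean per-frame distance between two non-empty signatures.

    Assumption: frames are already aligned in time; if the lengths
    differ, only the overlapping prefix is compared.
    """
    n = min(len(sig_a), len(sig_b))
    return sum(norm(sig_a[i], sig_b[i]) for i in range(n)) / n


def is_duplicate(sig_a, sig_b, threshold=0.1, norm=l1):
    """Flag two videos as duplicates when their signatures are close."""
    return signature_distance(sig_a, sig_b, norm) <= threshold


original = [[0.2, 0.8], [0.5, 0.5], [0.9, 0.1]]
recompressed = [[0.21, 0.79], [0.5, 0.5], [0.88, 0.12]]  # small coding noise
unrelated = [[0.9, 0.1], [0.1, 0.9], [0.5, 0.5]]

print(is_duplicate(original, recompressed))  # True
print(is_duplicate(original, unrelated))     # False
```

The threshold trades robustness against false positives: a larger value tolerates heavier recompression or editing but risks matching merely similar videos.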

Video duplicate detection is difficult for two reasons: (1) both signature generation and duplicate search must have low computational cost to be applicable to large-scale video sets, and (2) duplicates take diverse forms, so the video signature and similarity measure must be effective and robust.

A topic closely related to duplicate detection is copy detection, which addresses a more general form of the problem. Technologies for copy detection are often also called video signatures. Unlike duplicate detection, which only considers videos of the same or similar duration, copy detection must also determine whether a short clip appears in a longer video, or even whether two videos share one or more segments. Copy detection is generally used to combat piracy.
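The clip-in-video case can be sketched as a sliding-window search: the clip's signature is compared against every window of the same length in the longer video's signature, and the best-matching offset is reported if it is close enough. This brute-force version is for illustration only. The function name `find_clip`, the L1 distance, the threshold, and the sample signatures are assumptions.

```python
def l1(a, b):
    """L1 distance between two frame feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b))


def find_clip(clip_sig, video_sig, threshold=0.1):
    """Return the frame offset where the clip best matches, or None.

    Slides the clip signature over the video signature and keeps the
    offset with the smallest mean per-frame L1 distance. A match is
    reported only if that distance is within the threshold.
    """
    m, n = len(clip_sig), len(video_sig)
    if m == 0 or m > n:
        return None
    best_offset, best_distance = None, float("inf")
    for offset in range(n - m + 1):
        distance = sum(l1(clip_sig[i], video_sig[offset + i]) for i in range(m)) / m
        if distance < best_distance:
            best_offset, best_distance = offset, distance
    return best_offset if best_distance <= threshold else None


video = [[0.0, 1.0], [0.2, 0.8], [0.5, 0.5], [0.9, 0.1], [1.0, 0.0]]
clip = [[0.52, 0.48], [0.88, 0.12]]  # noisy copy of frames 2-3

print(find_clip(clip, video))  # 2
```

The cost grows with the product of the two signature lengths, which is why practical systems combine such matching with indexing or coarse-to-fine filtering.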

Web Video Recommendation. Helping users find the information they want is a central problem for both research and industry. For video, three basic approaches address this problem: video categorization, video search, and video recommendation. Many video-sharing websites, such as YouTube and MSN Soapbox, and even most video search websites, such as Google (Mountain View, CA) and Microsoft Live Search (Microsoft, Redmond, WA), provide video recommendation services.

Video recommendation is, in some sense, related to video search: both find videos related to something, namely the query (for video search) or a certain video (for video recommendation). However, they differ in two respects.

First, video search finds the videos that best match the query, whereas video recommendation finds videos related to the source video. For example, an "Apple" video is not a good search result for the query "Orange", whereas it may be a good recommendation for an "Orange" video. Second, the user profile is an important component of video recommendation.

Most recommendation systems use user profiles to provide a list of recommended videos to each specific user. The user's clicks, video selections, and ratings of items can all be part of the user profile, along with the user's location and other user information. In addition, most video recommendation systems use text information (such as the names of actors, actresses, and directors for a movie, or the surrounding text for a web video) to generate the recommendation list.

Content-based video analysis techniques can provide important evidence, in addition to the user profile and text, to improve recommendation. With such information, video recommendation can be formulated as a multimodal analysis framework.
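As a rough illustration of such a framework, the sketch below scores each candidate video by combining three kinds of evidence: text similarity to the source video (Jaccard overlap of tags), content similarity (cosine similarity of visual features), and the user's affinity for the candidate's category taken from a profile. The record layout, the weights, the function name `recommend`, and all data values are hypothetical.

```python
import math


def jaccard(a, b):
    """Overlap between two tag lists, in [0, 1]."""
    a, b = set(a), set(b)
    return len(a & b) / len(a | b) if a | b else 0.0


def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm_u = math.sqrt(sum(x * x for x in u))
    norm_v = math.sqrt(sum(y * y for y in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def recommend(source, candidates, profile, weights=(0.4, 0.4, 0.2), top_n=3):
    """Rank candidates by combined text, content, and profile evidence.

    profile maps a category name to the user's affinity in [0, 1];
    weights are (text, content, profile) and are assumed, not learned.
    """
    w_text, w_content, w_profile = weights
    scored = []
    for candidate in candidates:
        if candidate["id"] == source["id"]:
            continue  # never recommend the video being watched
        score = (
            w_text * jaccard(source["tags"], candidate["tags"])
            + w_content * cosine(source["visual"], candidate["visual"])
            + w_profile * profile.get(candidate["category"], 0.0)
        )
        scored.append((score, candidate["id"]))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [video_id for _, video_id in scored[:top_n]]


orange = {"id": "orange", "tags": ["orange", "fruit"], "visual": [1.0, 0.0], "category": "food"}
apple = {"id": "apple", "tags": ["apple", "fruit"], "visual": [0.9, 0.1], "category": "food"}
car = {"id": "car", "tags": ["car", "review"], "visual": [0.0, 1.0], "category": "autos"}
user_profile = {"food": 0.8, "autos": 0.1}

print(recommend(orange, [orange, apple, car], user_profile))  # ['apple', 'car']
```

The example mirrors the "Apple" and "Orange" case from the text: the apple video would not match an "orange" query exactly, yet shared tags, similar visuals, and the user's interest in food make it the top recommendation.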

Date added: 2024-06-15