Audiology, Testing. Subjective Hearing Testing

The Archives of Paediatrics of 1896 reported that 30 percent of the many children with poor educational and mental development in Germany and America had deficient hearing. At the close of the nineteenth century, methods for measuring hearing included observing the patient’s ability to detect the sounds of a ticking watch, Galton’s whistle, the spoken voice, and tuning forks.

Half a century earlier in Germany, Hermann Ludwig Ferdinand von Helmholtz investigated the physiology of hearing, and Adolf Rinne used tuning forks to diagnose types of deafness. Galton’s whistle produced precisely graded tones, though without loudness control, and allowed hearing ability to be explored across pitches; Adam Politzer’s acoumeter produced loud, controlled clicks for detecting severe deafness. These principles of frequency and intensity measurement have become integral features of modern audiometers.

Induction coil technology used in Alexander Graham Bell’s telephone (1877) formed the basis of many sound generators. In 1914 A. Stefanini (Italy) produced an alternating current generator with a complete range of tones, and five years later Dr. Lee W. Dean and C. C. Bunch of the U.S. produced a clinical “pitch range audiometer.” Prerecorded speech testing using the Edison phonograph was developed in the first decade of the twentieth century and was employed in mass testing of schoolchildren in 1927.

World War I brought about major advances in electro-acoustics in the U.S., Britain, and Germany with a revolution in audiometer construction. In 1922 the Western Electric Company (U.S.) manufactured the 1A Fowler machine using the new thermionic vacuum tubes to deliver a full range of pure tones and intensities. Later models restricted the frequencies to the speech range, reduced their size, and added bone conduction.

In 1933 the Hearing Tests Committee in the U.K. debated the problems of quantifying hearing loss with tuning forks, whose frequency and amplitude depended on how they were used. Despite the availability of electric audiometers at the time, the committee recommended standardized tuning fork manufacture and a strict method of use, along with graphic documentation of the results (audiography).

Concern abroad about a standard measure of sound intensity, or loudness, led the American Standards Association to adopt the bel, named after Alexander Graham Bell and already used as a unit in the acoustic industry. The decibel (dB), one-tenth of a bel, was adopted as the unit of relative sound intensity in the U.S., Britain, and then Germany. International standards were established in 1964.
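Because the bel expresses the logarithm of a ratio, a level in decibels is ten times the base-10 logarithm of an intensity ratio, or twenty times the logarithm of a sound-pressure ratio, since intensity is proportional to pressure squared. The minimal Python sketch below shows only this arithmetic; the function names are hypothetical and the reference values are arbitrary inputs, not the calibration constants of any audiometric standard.

```python
import math

def intensity_level_db(intensity: float, reference: float) -> float:
    """Level in decibels of an intensity (power) ratio: 10 * log10(I / I_ref)."""
    if intensity <= 0 or reference <= 0:
        raise ValueError("intensities must be positive")
    return 10.0 * math.log10(intensity / reference)

def pressure_level_db(pressure: float, reference: float) -> float:
    """Level in decibels of a sound-pressure ratio: 20 * log10(p / p_ref).

    Intensity is proportional to pressure squared, so 20 * log10 of a
    pressure ratio equals 10 * log10 of the corresponding intensity ratio.
    """
    if pressure <= 0 or reference <= 0:
        raise ValueError("pressures must be positive")
    return 20.0 * math.log10(pressure / reference)

if __name__ == "__main__":
    # A tenfold increase in intensity is 1 bel, i.e. 10 dB.
    print(intensity_level_db(10.0, 1.0))            # 10.0
    # Doubling the intensity adds about 3 dB.
    print(round(intensity_level_db(2.0, 1.0), 2))   # 3.01
    # Doubling the sound pressure adds about 6 dB.
    print(round(pressure_level_db(2.0, 1.0), 2))    # 6.02
```

The logarithmic scale is what makes the unit practical for hearing: the ear responds to an enormous range of intensities, which the decibel compresses into the roughly -10 to 120 dB span used on audiograms.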

Subjective Hearing Testing. Several hearing tests rely on the subject’s responses to different types of sound in a controlled setting. Tests are designed to detect conductive loss, which results from obstruction or disease of the outer or middle ear, and sensorineural (perceptive) loss, caused by lesions of the inner ear (cochlea) or of the auditory nerve (nerve of hearing) and its connections to the brain stem.

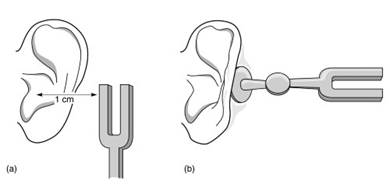

Tuning fork tests compare the response to sound transmitted by the normal route of air conduction (AC) with the response to sound transmitted by bone conduction (BC), through the bones of the skull directly to the cochlea. Conductive loss reduces air conduction, whereas perceptive (sensorineural) disorders reduce bone conduction as well. The Rinne test (Figure 4) is positive when AC>BC, as in normal ears and in sensorineural loss, and negative when AC<BC, which suggests conductive loss.

Figure 4. Rinne test using a tuning fork: (a) air conduction; (b) bone conduction. [Source: Ludman, H. and Kemp, D. T. Basic Acoustics and Hearing Tests, in Diseases of the Ear, 6th edn., Ludman, H. and Wright, T., Eds. Edward Arnold, London, 1998. Reprinted by permission of Hodder Arnold.]
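The interpretation of the Rinne comparison can be written as a small decision rule. In the sketch below, AC and BC are represented as how long (in seconds) the patient hears the fork by each route, which is one common way of performing the test; the function name and this duration-based representation are assumptions for illustration. The rule classifies only the Rinne result, not the overall diagnosis, which clinicians combine with other tests.

```python
def rinne_result(ac_seconds: float, bc_seconds: float) -> str:
    """Classify a Rinne test from how long the fork is heard by each route.

    Hypothetical helper: ac_seconds = air conduction, bc_seconds = bone conduction.
    """
    if ac_seconds > bc_seconds:
        # Air conduction better than bone conduction: normal ear or sensorineural loss.
        return "positive (AC > BC): normal or sensorineural"
    if ac_seconds < bc_seconds:
        # Bone conduction better: sound bypasses an obstructed outer or middle ear.
        return "negative (AC < BC): suggests conductive loss"
    # Equal durations give no clear answer.
    return "equivocal (AC = BC)"

if __name__ == "__main__":
    print(rinne_result(40, 20))
    print(rinne_result(15, 30))
```

Note that a positive result alone cannot separate a normal ear from a sensorineural loss, because both routes are reduced together in the latter; that is why threshold measurement by audiometry followed.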

Pure tone audiometry (PTA) is a subjective measurement of AC and BC thresholds, using headphones for air conduction and a bone vibrator for bone conduction. Responses are displayed graphically. Frequencies from 250 to 8,000 Hz, the range important for speech understanding, are plotted against intensities from -10 to 120 decibels hearing level (dB HL). The internationally agreed normal threshold of hearing is 0 dB HL, calibrated from the thresholds of healthy young adults. The degree of hearing loss is classified from the pure tone audiogram of each ear.
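One common way to summarize an audiogram is to average the air-conduction thresholds at a few speech frequencies (the pure-tone average) and map that average to a degree of loss; comparing it with the bone-conduction average (the air-bone gap) indicates the type of loss. The Python sketch below assumes an average over 500, 1,000, 2,000, and 4,000 Hz, illustrative grade boundaries, a 20 dB HL normal limit, and a 10 dB gap criterion. All of these are assumptions: published classification schemes differ in both the frequencies averaged and the cut-off values.

```python
from statistics import mean

# Assumed frequencies (Hz) for the pure-tone average; schemes differ.
PTA_FREQUENCIES = (500, 1000, 2000, 4000)

# Illustrative upper limits (dB HL) for each grade; not taken from one standard.
GRADES = ((20, "normal"), (40, "mild"), (70, "moderate"), (90, "severe"))

def pure_tone_average(thresholds: dict[int, float]) -> float:
    """Mean threshold (dB HL) over the assumed frequencies."""
    return mean(thresholds[f] for f in PTA_FREQUENCIES)

def degree_of_loss(average_db: float) -> str:
    """Map a pure-tone average to an illustrative grade."""
    for upper, label in GRADES:
        if average_db <= upper:
            return label
    return "profound"

def type_of_loss(ac: dict[int, float], bc: dict[int, float],
                 normal_limit: float = 20.0, gap_limit: float = 10.0) -> str:
    """Rough type of loss from averaged AC and BC thresholds (assumed limits)."""
    ac_avg = pure_tone_average(ac)
    bc_avg = pure_tone_average(bc)
    gap = ac_avg - bc_avg  # air-bone gap
    if ac_avg <= normal_limit:
        return "normal"
    if bc_avg <= normal_limit and gap > gap_limit:
        return "conductive"   # cochlea works; sound is blocked on the way in
    if gap > gap_limit:
        return "mixed"        # elevated BC plus an additional gap
    return "sensorineural"    # AC and BC elevated together

if __name__ == "__main__":
    ac = {500: 45.0, 1000: 50.0, 2000: 55.0, 4000: 50.0}
    bc = {500: 10.0, 1000: 15.0, 2000: 15.0, 4000: 10.0}
    avg = pure_tone_average(ac)
    print(avg, degree_of_loss(avg), type_of_loss(ac, bc))  # 50.0 moderate conductive
```

Each ear is evaluated separately, as in the text, by calling these functions with that ear's thresholds.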

Speech audiometry tests the subject’s response to phonetically balanced words presented at different intensities. The test is used to evaluate speech discrimination when fitting hearing aids and when assessing candidates for cochlear implantation.
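Speech audiometry results are usually expressed as the percentage of test words repeated correctly at each presentation level. The sketch below computes those percentages and the best score across levels; the function names, the 25-word lists, and the data are hypothetical and serve only to show the arithmetic.

```python
def word_recognition_scores(results: dict[int, tuple[int, int]]) -> dict[int, float]:
    """Percent correct at each level (dB HL) from (words_correct, words_presented)."""
    scores = {}
    for level, (correct, presented) in sorted(results.items()):
        if presented <= 0:
            raise ValueError("each level needs at least one word presented")
        scores[level] = 100.0 * correct / presented
    return scores

def best_score(scores: dict[int, float]) -> tuple[int, float]:
    """Return (level, score) of the highest score; the lowest level wins ties."""
    level = max(sorted(scores), key=lambda lv: scores[lv])
    return level, scores[level]

if __name__ == "__main__":
    # Hypothetical results: (words correct, words presented) per level in dB HL.
    data = {30: (8, 25), 40: (17, 25), 50: (23, 25), 60: (23, 25), 70: (21, 25)}
    scores = word_recognition_scores(data)
    print(scores)              # {30: 32.0, 40: 68.0, 50: 92.0, 60: 92.0, 70: 84.0}
    print(best_score(scores))  # (50, 92.0)
```

Scoring at several levels, rather than one, shows how discrimination changes with loudness, which is the information used when judging how much benefit amplification is likely to give.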

Behavioral Testing. Around 1900, neurophysiology gained importance with the work of Charles Scott Sherrington in the U.K. and Ivan Petrovich Pavlov in Russia, who were exploring innate and conditioned reflexes. Over the next few decades, interest spread beyond Europe to Japan and the U.S. Clinicians began to use these subjective behavioral responses, along with various noisemakers, to identify hearing loss in infants and children.

The year 1944 was an important landmark, with the introduction of a developmental distraction test researched by Irene R. Ewing and Alexander W. G. Ewing at Manchester University. This first standard preschool screening test became a national procedure in Britain in 1950 and was later adopted in Europe, Israel, and the U.S. Infants about 8 months old were observed for head turns toward meaningful low- and high-frequency sounds, with one person holding the infant’s attention while another presented the test sounds. Although still in use, this screening method failed to detect moderate deafness.

Automated behavioral screening, which detected an infant’s body movements in response to sound, marked a transitional period from the mid-1970s. Both the American Crib-o-gram (1973) and Bennett’s Auditory Cradle (London, 1979) proved inefficient.

Objective Testing. In 1924 German psychiatrist Dr. Hans Berger recorded brain waves with surface electrodes on the scalp. Fifteen years later, P. A. Davis in the U.S. used electroencephalography (EEG) to record electrical potentials from the brain in response to sound stimulation. Earlier, in the journal Science in 1930, Ernest Wever and Charles Bray, also in the U.S., had demonstrated that the inner ear of cats produces electrical potentials in response to sound.

In New York in 1960, action potentials were recorded in humans with an electrode passed through the middle ear directly onto the inner ear. Electrocochleography (ECoG) was used for diagnosis but required anesthesia, particularly in children, and was largely replaced by noninvasive auditory brainstem response audiometry (ABR).

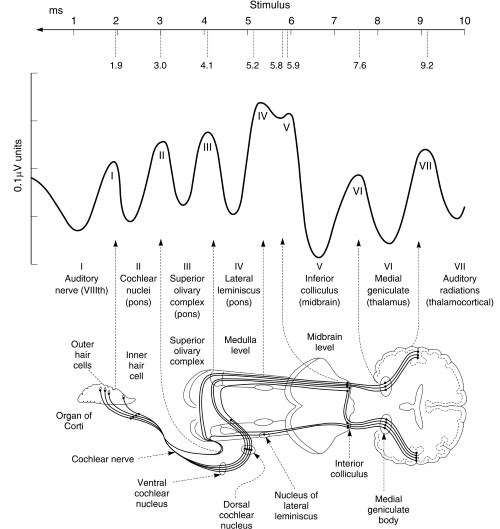

In the 1950s, computerized signal averaging made it possible to extract from the EEG the small, synchronous neural discharges generated along the auditory pathway by repeated acoustic stimuli. In the late 1960s, research in Israel and the U.S., aided by improved computer averaging that suppressed background electrical noise, identified these potentials more easily in cats and humans. The resulting peaks, labeled waves I to VII, reflect activity at successive sites along the auditory pathway, with wave V the most useful for diagnosis (Figure 5).

Figure 5. Normal brain stem responses and anatomical correlates of waves I to VII in auditory brain stem response (ABR). [Source: Ludman, H. and Kemp, D. T. Basic Acoustics and Hearing Tests, in Diseases of the Ear, 6th edn., Ludman, H. and Wright, T., Eds. Edward Arnold, London, 1998. Reprinted by permission of Hodder Arnold.]
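Signal averaging works because the evoked response is time-locked to each stimulus and repeats identically, whereas background EEG and muscle activity vary randomly from sweep to sweep. Averaging N sweeps therefore leaves the response unchanged while shrinking random noise by about a factor of √N. The standard-library Python sketch below demonstrates the principle with a synthetic waveform; the sine-shaped "response", its amplitude, the noise level, and all function names are assumptions, not real ABR data or any instrument's algorithm.

```python
import math
import random
from statistics import pstdev

SAMPLES = 100  # points per sweep (arbitrary)

def evoked_response(i: int) -> float:
    """Synthetic stand-in for a time-locked response (one sine cycle, amplitude 1)."""
    return math.sin(2 * math.pi * i / SAMPLES)

def record_sweep(rng: random.Random, noise_sd: float) -> list[float]:
    """One sweep: the same response plus fresh Gaussian background noise."""
    return [evoked_response(i) + rng.gauss(0.0, noise_sd) for i in range(SAMPLES)]

def average_sweeps(sweeps: list[list[float]]) -> list[float]:
    """Point-by-point mean across all sweeps."""
    n = len(sweeps)
    return [sum(s[i] for s in sweeps) / n for i in range(SAMPLES)]

def residual_noise(avg: list[float]) -> float:
    """Standard deviation of what remains after subtracting the true response."""
    return pstdev(avg[i] - evoked_response(i) for i in range(SAMPLES))

if __name__ == "__main__":
    rng = random.Random(0)
    # Noise ten times larger than the response: invisible in a single sweep.
    for n in (1, 16, 256, 1024):
        sweeps = [record_sweep(rng, noise_sd=10.0) for _ in range(n)]
        print(n, round(residual_noise(average_sweeps(sweeps)), 2))
```

With these settings the residual noise should fall from roughly 10 for a single sweep to roughly 0.3 after 1,024 sweeps, so a response buried in noise ten times its size becomes clearly visible, which is why clinical recordings average hundreds or thousands of stimuli.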

ABR was thoroughly investigated in the U.S. and was validated in 1974 for testing high-risk infants. An automated version, automated auditory brain stem response (AABR), was manufactured in the U.S. during the following decade and proved to be an affordable, noninvasive screening tool for babies. The device used short broadband clicks to elicit responses in the 2,000 to 4,000 Hz speech recognition range and used an algorithm to compare the results with a template of age-appropriate normal responses.
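The comparison of a recorded response with a stored normal template can be illustrated with a similarity score. Commercial AABR screeners use their own, often proprietary, detection statistics; the sketch below substitutes a plain Pearson correlation, and the 0.7 pass threshold, the function names, and the toy waveforms are all hypothetical.

```python
import math

def pearson(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length waveforms."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("waveforms must have the same length (at least 2)")
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a flat trace carries no response to match
    return sxy / math.sqrt(sxx * syy)

def screen(recording: list[float], template: list[float],
           threshold: float = 0.7) -> str:
    """'pass' if the recording resembles the template closely enough, else 'refer'."""
    return "pass" if pearson(recording, template) >= threshold else "refer"

if __name__ == "__main__":
    # Toy template with a small early peak and a larger later peak.
    template = [0.0, 1.0, 3.0, 1.0, 0.0, -1.0, 0.0, 2.0, 5.0, 2.0, 0.0]
    wobble = [0.2, -0.1, 0.3, 0.0, -0.2, 0.1, 0.2, -0.3, 0.1, 0.0, -0.1]
    recording = [t + w for t, w in zip(template, wobble)]
    print(screen(recording, template))             # pass
    print(screen([0.0] * len(template), template))  # refer
```

A correlation is used here because it ignores overall amplitude and offset and rewards matching shape, which loosely mirrors the idea of comparing a waveform against a normal pattern; a real device would also need to control the false-pass rate statistically.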

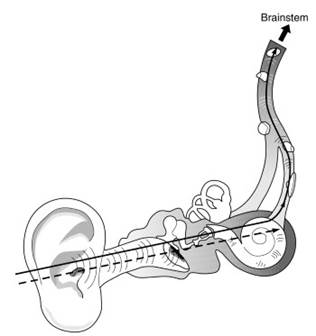

In 1978 David Kemp in London investigated earlier reports that the ear re-emits sound and redefined the function of the outer hair cells of the inner ear. These sensory cells were shown to “amplify” the mechanical sound waves traveling along the fluid-filled cochlear duct, sharpening and strengthening each individual sound so that it stimulates a specific anatomical site along the duct.

Figure 6. Screeners use automated auditory brain stem response (AABR) technology to test the entire hearing pathway from the ear to the brain stem. Otoacoustic emissions (OAE) test hearing from the outer ear to the cochlea only.

The excess energy produced by this process was reflected as “echoes,” which could be collected with Kemp’s miniaturized ear canal microphone. These otoacoustic emissions (OAE) were analyzed by computer according to their frequency content, providing an extremely sensitive means of detecting cochlear hair-cell damage (Figure 6). After trials in France, OAE testing was widely accepted as an efficient and safe screening tool.
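Frequency-based analysis of emissions is often reduced to a simple rule: in each frequency band, is the emission clearly louder than the background noise measured at the same time? The sketch below applies such a rule; the band centres, the 6 dB signal-to-noise criterion, the requirement that three bands pass, and the function names are illustrative assumptions, since real pass criteria vary between devices and screening protocols.

```python
def band_passes(emission_db: float, noise_db: float, min_snr_db: float = 6.0) -> bool:
    """A band passes when the emission exceeds the noise floor by min_snr_db."""
    return emission_db - noise_db >= min_snr_db

def oae_screen(bands: dict[int, tuple[float, float]],
               min_snr_db: float = 6.0, bands_required: int = 3) -> str:
    """'pass' if enough frequency bands show an emission above the noise floor.

    bands maps a band centre (Hz) to (emission level, noise level) in dB SPL.
    """
    passing = sum(1 for em, nz in bands.values() if band_passes(em, nz, min_snr_db))
    return "pass" if passing >= bands_required else "refer"

if __name__ == "__main__":
    # Hypothetical measurements: (emission, noise) in dB SPL per band centre.
    bands = {1000: (8.0, 0.0), 2000: (10.0, -2.0), 3000: (5.0, 1.0), 4000: (2.0, -1.0)}
    print(oae_screen(bands))  # refer: only 1000 and 2000 Hz reach 6 dB SNR
    bands[3000] = (9.0, 1.0)
    print(oae_screen(bands))  # pass: three bands now reach 6 dB SNR
```

Requiring several bands to pass reflects the frequency-specific nature of the emissions described above: damage confined to one region of the cochlea shows up as missing emissions in the corresponding band.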
