The Model. The Complexity-scope Exchange

The model consists of four main parts. The core proposition has been presented above; the detailed description follows. Briefly, the model can be explained as follows:

1. The question considered here is to what extent Alice should be confident that a course of action within a transaction will be beneficial to her.

2. While our primary interest is in Alice's relationship with Bob, Alice must recognise that the success of the transaction is contingent on several entities.

3. Alice assumes that certain enablers are in place: identity, similarity, honesty and availability.

4. Alice considers four kinds of entities that may influence the outcome: world, domains, agents and transactions.

5. Depending on her affordable complexity, Alice includes a selection of entities in her transactional horizon. Each added entity increases the complexity but may decrease the uncertainty of her assessment.

6. Having decided on the entities, Alice builds the model of confidence, capturing the relationships between those entities.

7. Alice uses available evidence to assess confidence in each entity, separately assessing trust and control.

8. On that basis, Alice assesses her confidence in the horizon of a transaction.

Entities. Alice structures her world into abstract entities of certain types. The defining feature of an entity is that Alice is willing to express her confidence in it, e.g. she can be confident about Bob, about doctors, about the world or about a recent payment. Even though the exact structuring depends on Alice, the proposition is that Alice deals with entities of four different kinds:

1. Agent is the smallest entity to which intentions and free will can be attributed. Examples of agents are individuals (e.g. Bob), computers, companies, governments, shops, doctors, software, etc. Agents can be both the subject of evidence and sources of evidence. The abstraction of agents captures their semi-permanent characteristics. Confidence in agents is of major concern to Alice, as agents are perceived to be highly independent in their intentions.

2. Domain is the collective abstraction of a group of agents that share certain common characteristics, e.g. all agents that are doctors, all agents that engage in commerce, all computers, etc. Each domain allows Alice to express confidence about a particular behavioural profile of agents (e.g. 'I am confident in doctors') and substitutes for missing knowledge about a particular agent if that agent is believed to share the characteristics of the domain. One agent may belong to several domains, and one transaction may include several domains as well (e.g. 'electronic commerce' and 'governed by EU law').

3. World. This top-level abstraction encapsulates all agents and is used as a default to substitute for unknown information of any kind. The confidence in the world is often referred to as an 'attitude' or 'propensity to trust' and can be captured by statements such as 'people are good', 'the world is helpful', etc.

4. Transaction. This entity introduces the temporal aspect, as it encapsulates the transient elements associated with an individual transaction. Specifically, the behaviour of various agents at the time of the transaction, the value of the transaction, its particularities, etc. are captured here. Note that the transaction entity differs from the horizon of a transaction.

The ability to generalise and abstract confidence allows one to build confidence in more abstract entities on the basis of experience with more specific ones. Specifically, evidence relevant to a transaction (e.g. its positive or negative outcome) can gradually accumulate as confidence in the world.

The opposite is not true: confidence in the world (or in a domain) cannot be directly used to build confidence about an agent. However, such confidence substitutes for and complements the evidence about an individual, working as a default and thus decreasing the need for confidence in individual agents.
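The asymmetry between generalisation and defaults can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that confidence is a number in [0, 1] estimated from counts of positive and negative outcomes, that an outcome recorded for a transaction is credited once to every more abstract entity above it, and that the parents' confidence only acts as a default (a prior) for a more specific entity. The `Entity` class, its methods and the prior weight of 2 are hypothetical and not part of the model itself.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum


class Kind(Enum):
    WORLD = "world"
    DOMAIN = "domain"
    AGENT = "agent"
    TRANSACTION = "transaction"


@dataclass(eq=False)
class Entity:
    name: str
    kind: Kind
    parents: list[Entity] = field(default_factory=list)  # more abstract entities
    positive: int = 0  # outcomes that went well
    negative: int = 0  # outcomes that went badly

    def record(self, success: bool) -> None:
        """Credit an outcome to this entity and, once each, to every ancestor."""
        seen: set[int] = set()
        stack = [self]
        while stack:
            entity = stack.pop()
            if id(entity) in seen:
                continue
            seen.add(id(entity))
            if success:
                entity.positive += 1
            else:
                entity.negative += 1
            stack.extend(entity.parents)

    def confidence(self, prior_weight: float = 2.0) -> float:
        """Own evidence blended with a default taken from the parents.

        Evidence flows upwards only: a parent never adds to this entity's
        counts; its confidence merely acts as the default (prior).
        """
        if self.parents:
            prior = sum(p.confidence(prior_weight) for p in self.parents) / len(self.parents)
        else:
            prior = 0.5  # the world has nothing above it, so start neutral
        n = self.positive + self.negative
        return (self.positive + prior_weight * prior) / (n + prior_weight)


world = Entity("world", Kind.WORLD)
ec = Entity("electronic commerce", Kind.DOMAIN, [world])
bob = Entity("Bob", Kind.AGENT, [ec])
t1 = Entity("t1", Kind.TRANSACTION, [bob, ec])
t1.record(True)  # a successful transaction also builds confidence in Bob, EC and the world

carol = Entity("Carol", Kind.AGENT, [ec])  # no history with Carol at all
print(round(carol.confidence(), 3))  # 0.778: the domain default stands in for evidence
```

Carol's counts stay at zero, so nothing has been "built" about her; the domain's confidence is used only because her own evidence is missing.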

The Complexity-scope Exchange. Alice's choices regarding her horizon are restricted by her limited disposable complexity. The amount of such complexity can depend on several factors, including physical and psychological predispositions, current cognitive capabilities, tiredness, etc. For example, a young, healthy, educated Alice may be willing to accept much more complexity than an old and sick one. Similarly, Alice-organisation may be willing to deal with much higher complexity than Alice-individual.

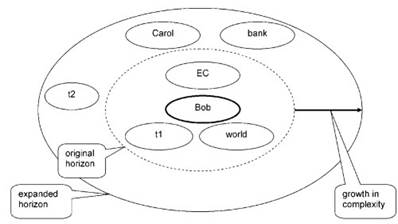

Alice's disposable complexity can be traded to improve the assessment of confidence by expanding the horizon of the transaction, i.e. by including additional entities. Figure 2.1 shows an example of this exchange. Having only limited affordable complexity, Alice has initially decided to include only four entities in the scope of her transaction (note that this is the reasonable minimum): the world, Bob, a domain (electronic commerce, EC) and the particulars of this transaction (t1).

Figure 2.1. The complexity-horizon exchange

Having realised that she has some complexity to spare, she decides to extend the scope and include more entities: Carol (who, e.g., knows Bob), her bank (which can guarantee the transaction), her past experience with Bob (t2), etc. Note that several entities are likely to remain beyond the horizon.

We do not require Alice to attempt to reach any particular level of confidence. Alice might have decided on a certain minimum level of confidence and may refuse to proceed if such a level is not attainable. However, Alice may also be in a position where she simply makes the decision she is most confident with, regardless of the actual level of confidence. Whatever her situation, the only thing she can trade her complexity for is the additional information that comes from extending the horizon of the transaction.

Alice is free to include whatever entities she likes. However, if her choice is not relevant to the transaction, she wastes some of her affordable complexity without gaining in the quality of the assessment. Alice can also artificially increase or decrease her confidence in Bob by selectively extending the scope of the transaction, something that may make her happy but is not necessarily wise.

What should drive Alice when choosing her horizon is the prospect of reducing uncertainty. Even though no entity can provide an absolutely certain assessment of Bob, each can provide an assessment with its own level of certainty. If Alice wants to proceed wisely and obtain the best assessment, she should pick those entities that help her reach the lowest possible uncertainty (or the highest possible certainty).
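The trade-off can be made concrete by scoring a candidate horizon. The sketch below is illustrative only: it assumes that every entity has a complexity cost and removes an independent fraction of the remaining uncertainty, so the uncertainty left is the product of what each entity leaves behind. The `Candidate` type, the `evaluate` function and all numbers are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from math import prod


@dataclass(frozen=True)
class Candidate:
    name: str
    cost: float       # complexity needed to keep the entity within the horizon
    reduction: float  # assumed fraction of the remaining uncertainty it removes (0..1)


def evaluate(horizon: list[Candidate], budget: float) -> tuple[float, float]:
    """Return (complexity spent, remaining uncertainty) for a candidate horizon."""
    spent = sum(c.cost for c in horizon)
    if spent > budget:
        raise ValueError(f"horizon needs {spent} units of complexity, only {budget} available")
    # Independence assumption: each entity removes its fraction of what is left.
    remaining = prod(1.0 - c.reduction for c in horizon)
    return spent, remaining


minimal = [
    Candidate("world", 1, 0.1),
    Candidate("Bob", 2, 0.4),
    Candidate("electronic commerce", 1, 0.2),
    Candidate("t1", 1, 0.1),
]
carol = Candidate("Carol", 2, 0.3)
unrelated = Candidate("unrelated entity", 2, 0.0)  # costs complexity, removes nothing

for horizon in (minimal, minimal + [carol], minimal + [unrelated]):
    print(evaluate(horizon, budget=8))
```

Adding Carol and adding the unrelated entity cost the same complexity, but only Carol lowers the remaining uncertainty; the unrelated entity is pure waste, as the text argues.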

When choosing entities, Alice bears in mind that trusting Bob is usually cheaper precisely because Alice does not have to deal with instruments that control Bob (in addition to Bob) but only with Bob himself. If trust can further be assessed on the basis of first-hand experience (so that Alice can provide all the necessary evidence herself), this option looks lean compared to anything else.

However, this is not always true. If Alice can trust Carol and Carol promises to control Bob, then controlling Bob may be a cheaper option. For example, if Alice has little experience with Bob but Carol is her lifelong friend who knows how to control people, then Alice may find trusting Carol and controlling Bob both cheaper and more efficient.

The Assessment of Confidence. Let us now consider how confidence can be assessed, i.e. how Alice can determine her level of confidence. The proposition is that, within the horizon of the transaction, Alice can determine her overall confidence by combining her levels of confidence in all the different entities (world, domain, agents and transactions) according to her perceived relationships between them.

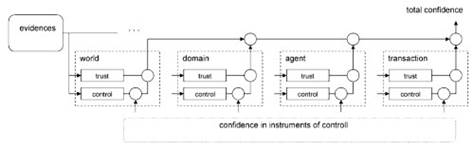

Figure 2.2. Confidence across different entities

Figure 2.2 shows the simplified relationship between the confidence coming from different types of entities, assuming that there are only four entities within the horizon and that there are no relationships between them.

Two Paths. Sources of Evidence

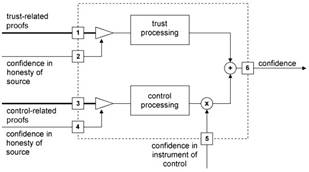

The proposition is that there are two conceptual assessment paths: one for trust and another for control. Evidence of trust is delivered to trust processing, which assesses it and generates the associated level of trust. Evidence of control is delivered to control processing, but the outcome of control processing is further influenced by the confidence in the instrument of control (Figure 2.2 and Figure 2.3).

Alice has two choices: she can either use trust directly or use control together with the confidence she has in the efficiency of the instruments of control, provided that those instruments are included in her horizon.

Figure 2.3. Basic building block

In either case, trust is the prerequisite of action: either Alice trusts Bob, or Alice is confident (which recursively requires trust) in someone (or something) else to control Bob, and she must include such an entity within the scope of the transaction. In fact, trust is the only irreducible element of the model: at a certain level, control requires trust.
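A single building block with its two paths might be sketched as follows. The combination rules are assumptions made for illustration, not the model's defined calculus: all values lie in [0, 1], the outcome of control processing is attenuated by multiplying it by Alice's confidence in the instrument of control, and Alice relies on whichever path gives her more confidence. The function name `building_block` is hypothetical.

```python
def building_block(trust: float, control: float = 0.0, instrument: float = 0.0) -> float:
    """Confidence produced by one building block (illustrative rules).

    trust:      level of trust produced by trust processing
    control:    outcome of control processing
    instrument: confidence in the instrument of control (0 if none is in the horizon)
    """
    for value in (trust, control, instrument):
        if not 0.0 <= value <= 1.0:
            raise ValueError("all values are assumed to lie in [0, 1]")
    # Control path: control is only as good as Alice's confidence in the instrument.
    controlled = control * instrument
    # Alice relies on whichever path gives her more confidence.
    return max(trust, controlled)


print(building_block(trust=0.3))                               # trust path only
print(building_block(trust=0.3, control=0.9, instrument=0.8))  # control path is stronger
```

Because `instrument` would itself be produced by another building block, the control path never stands alone; the recursion ends only where plain trust is used.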

Sources of Evidence. Trust and control require evidence. It is generally understood that trust cannot appear in the absence of evidence; such a situation is typically referred to as faith. However, neither trust nor control requires the complete set of evidence [Arion1994].

Evidence is not judged identically. The significance of each piece of evidence is attenuated depending on the perceived honesty of its source. First-hand evidence can also be weighted by the confidence that the agent has in its own honesty. Honesty of the agent is one of the enablers and will be discussed later. It is worth noting that confidence in an agent's honesty can be obtained by recursive use of the model, within a different area of discourse.
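Attenuation by honesty can be sketched as a weighted average in which each piece of evidence counts in proportion to Alice's confidence in the honesty of its source; for first-hand evidence the source is Alice herself. The weighting scheme, the `Evidence` type, the `attenuated_assessment` function and the example sources are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    source: str
    value: float  # what the evidence suggests about the subject, 0..1


def attenuated_assessment(evidence: list[Evidence], honesty: dict[str, float]) -> float:
    """Average evidence values, weighting each by the perceived honesty of its source.

    honesty maps each source (including "Alice" for first-hand evidence) to
    Alice's confidence in that source's honesty, itself possibly obtained by
    applying the model recursively.
    """
    weights = [honesty.get(e.source, 0.0) for e in evidence]  # unknown sources count for nothing
    total = sum(weights)
    if total == 0.0:
        raise ValueError("no evidence from a source Alice considers honest")
    return sum(w * e.value for w, e in zip(weights, evidence)) / total


evidence = [
    Evidence("Alice", 0.8),     # first-hand experience with Bob
    Evidence("Carol", 0.6),
    Evidence("stranger", 0.0),
]
print(attenuated_assessment(evidence, {"Alice": 1.0, "Carol": 0.5, "stranger": 0.1}))
```

The stranger's damning report barely moves the result because Alice has little confidence in the stranger's honesty; with no credible source at all, the sketch refuses to produce an assessment rather than fall back on faith.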

Evidence of trust and evidence of control differ, even though they may come from the same source. There are three classes of evidence related to trust: continuity, competence and motivation. There are three classes of evidence regarding control: influence, knowledge and reassurance.
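The six classes and the two paths they feed can be encoded directly, for example as an enumeration that routes each piece of evidence to trust or control processing. The type names, the `route` function and the sample observations (and how they are classified) are hypothetical illustrations.

```python
from __future__ import annotations

from enum import Enum


class Path(Enum):
    TRUST = "trust"
    CONTROL = "control"


class EvidenceClass(Enum):
    # classes of evidence related to trust
    CONTINUITY = ("continuity", Path.TRUST)
    COMPETENCE = ("competence", Path.TRUST)
    MOTIVATION = ("motivation", Path.TRUST)
    # classes of evidence regarding control
    INFLUENCE = ("influence", Path.CONTROL)
    KNOWLEDGE = ("knowledge", Path.CONTROL)
    REASSURANCE = ("reassurance", Path.CONTROL)

    @property
    def path(self) -> Path:
        return self.value[1]


def route(items: list[tuple[EvidenceClass, str]]) -> dict[Path, list[str]]:
    """Split evidence between the trust path and the control path by its class."""
    routed: dict[Path, list[str]] = {Path.TRUST: [], Path.CONTROL: []}
    for evidence_class, description in items:
        routed[evidence_class.path].append(description)
    return routed


# Hypothetical observations; Carol might supply several of them at once.
observations = [
    (EvidenceClass.CONTINUITY, "Bob expects to keep trading with Alice"),
    (EvidenceClass.INFLUENCE, "the bank can reverse the payment"),
    (EvidenceClass.COMPETENCE, "Bob delivered correctly last time"),
]
print(route(observations))
```

Routing by class rather than by source reflects the point that one source can deliver evidence for both paths.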

The Building Block. The graphical representation of the confidence building block (Figure 2.3) can be used to further illustrate the proposition. This representation does not, however, directly capture the recursive nature of the evaluation.

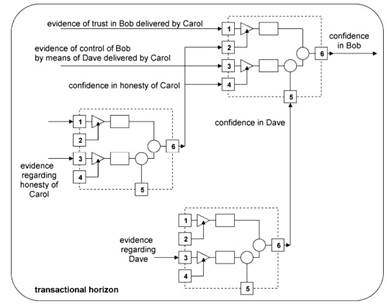

Figure 2.4. Building blocks within a horizon

To represent graphically the recursive and iterative nature of confidence within the horizon of a transaction, Figure 2.4 demonstrates how several building blocks can be combined to develop confidence in more complex (and more realistic) cases. Here, within her horizon, Alice must assess her confidence in three entities: Bob, Carol and Dave. While Bob seems to be critical to success, Carol is the main source of evidence about him and Dave is an instrument for controlling him.
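The arrangement of Figure 2.4 can be sketched by nesting building blocks. The rules are illustrative assumptions, not the model's own: values lie in [0, 1]; Carol's report about Bob is blended with Alice's first-hand trust, weighted by Alice's confidence in Carol (used here, for simplicity, as confidence in Carol's honesty); control through Dave is attenuated by Alice's confidence in Dave; and Alice takes the stronger path. The `Block` class and all numbers are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Block:
    """One building block; assumes the horizon contains no cycles."""
    name: str
    trust: float                        # trust from Alice's own, first-hand evidence
    source: Optional[Block] = None      # agent supplying further evidence, if any
    reported: float = 0.0               # what that source reports about this entity
    control: float = 0.0                # outcome of control processing
    instrument: Optional[Block] = None  # instrument of control, if any

    def confidence(self) -> float:
        trust = self.trust
        if self.source is not None:
            # Recursive step: confidence in the source decides how much its report counts.
            honesty = self.source.confidence()
            trust = (trust + honesty * self.reported) / (1.0 + honesty)
        controlled = 0.0
        if self.instrument is not None:
            # Recursive step: control is only as good as confidence in the instrument.
            controlled = self.control * self.instrument.confidence()
        return max(trust, controlled)


carol = Block("Carol", trust=0.9)  # source of evidence, assessed by trust alone
dave = Block("Dave", trust=0.7)    # instrument of control, assessed by trust alone
bob = Block("Bob", trust=0.4, source=carol, reported=0.8, control=0.9, instrument=dave)
print(round(bob.confidence(), 2))  # 0.63: the control path through Dave dominates
```

Every recursion bottoms out in a block that uses trust only, which mirrors the claim that trust is the irreducible element of the model.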

Assessment as an Optimisation Process. The assessment of confidence can also be modelled as an optimisation process. When choosing entities for inclusion in her horizon, Alice trades complexity for a reduction of uncertainty. Every entity comes with a cost that determines how much of her complexity Alice must spend on maintaining it. If Alice selects herself, the cost may be negligible; Bob may come cheaply, but Dave, for example, may require significant effort to make him work for her.

Every entity also comes with an offer to increase Alice's certainty about her transactional confidence (possibly with regard to another entity). The ability to increase certainty may come, e.g., from experience, a proven ability to deal with the situation, etc. While keeping the complexity she spends within the limit of her disposable complexity, Alice must maximise the certainty she receives.

Further, every entity offers its own assessment of confidence. Depending on its position within the horizon, such confidence may be about Bob, about controlling Bob, about being honest, etc. Even though Alice may want confidence, the actual values offered by entities should not motivate her decisions regarding their inclusion within the scope. She should want only the maximisation of certainty. She is therefore trying to optimise her decision so that, for the minimum amount of disposable complexity, she gains the maximum possible certainty about the level of confidence.
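Stated as a formula, and assuming purely for illustration that the certainty offered by each entity simply adds up, the choice of a horizon becomes a 0/1 knapsack problem. Here E is the set of candidate entities, c_e the complexity cost of entity e, g_e the certainty it offers, C Alice's disposable complexity and H the chosen horizon; these symbols are introduced for this sketch and are not part of the original model.

```latex
H^{*} = \arg\max_{H \subseteq E} \sum_{e \in H} g_e
\quad \text{subject to} \quad \sum_{e \in H} c_e \le C
```

Among horizons that offer the same certainty, Alice would prefer the one with the lower total cost.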

As the problem is NP-complete, Alice is not likely to proceed exactly as described above and arrive at the best solution. From this perspective, the 'true' level of confidence is not available to Alice. She is likely either to stay with simplistic, sub-optimal solutions or to apply simple heuristics to identify potential improvements.
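The gap between the best horizon and a simple heuristic shows up even on a toy instance. Under the same illustrative assumption of additive certainty, the sketch below finds the optimum by enumerating every subset of candidates (time exponential in their number) and compares it with a greedy heuristic that keeps adding the entity with the best certainty per unit of complexity. The greedy rule is a common knapsack heuristic chosen for illustration, not a procedure taken from the model; the candidates and numbers are hypothetical.

```python
from __future__ import annotations

from itertools import combinations


def exact(candidates: dict[str, tuple[float, float]], budget: float) -> tuple[float, frozenset[str]]:
    """Optimal horizon by brute force over all 2**n subsets (exponential time)."""
    best_gain, best_cost, best_set = 0.0, 0.0, frozenset()
    names = list(candidates)
    for size in range(1, len(names) + 1):
        for subset in combinations(names, size):
            cost = sum(candidates[n][0] for n in subset)
            gain = sum(candidates[n][1] for n in subset)
            if cost > budget:
                continue
            # Prefer more certainty; for equal certainty, prefer less complexity.
            if gain > best_gain or (gain == best_gain and cost < best_cost):
                best_gain, best_cost, best_set = gain, cost, frozenset(subset)
    return best_gain, best_set


def greedy(candidates: dict[str, tuple[float, float]], budget: float) -> tuple[float, frozenset[str]]:
    """Heuristic: add entities by certainty per unit of complexity (costs must be > 0)."""
    ranked = sorted(candidates, key=lambda n: candidates[n][1] / candidates[n][0], reverse=True)
    chosen: set[str] = set()
    spent = gain = 0.0
    for name in ranked:
        cost, offered = candidates[name]
        if spent + cost <= budget:
            chosen.add(name)
            spent += cost
            gain += offered
    return gain, frozenset(chosen)


# name -> (complexity cost, certainty gain); hypothetical values
candidates = {
    "Carol": (1.0, 2.0),
    "bank": (3.0, 4.5),
    "t2": (3.0, 4.5),
}
print(exact(candidates, budget=6.0))   # bank and t2: gain 9.0
print(greedy(candidates, budget=6.0))  # Carol and bank: gain 6.5, sub-optimal
```

The heuristic is cheap and often good enough, but here it commits to Carol first and then cannot fit both the bank and t2, which is exactly the kind of sub-optimal horizon Alice is likely to settle for.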
