Recursion. Enablers. Similarity. Honesty. Identity

Recursion is an attractive property of this model: even though the basic building block is relatively simple, it can be replicated and inter-connected to model assessments in complex transactions. The recursive nature of the model leads in two different directions. First, the confidence in honesty is used within the model to qualify evidence. We can see that it leads us to yet another level of confidence assessment where the context is not about a particular service but about the ability to tell the truth. This will be discussed later as one of the key enablers.

Second, confidence in instruments of control is used to qualify confidence that comes through the control path (see also e.g. [Tan2000]). The reasoning behind this recursion is very simple: if we want to use a certain instrument of control, then we had better be sure that such an instrument works - that it will respond to our needs and that it will work for us.

We can combine trust with control here in exactly the same way as in the main building block of the model. Specifically, we can control instruments of control - but this requires yet another recursive layer of trust and control. This raises an interesting question: how deep should we go? If we employ a watcher to gain control, who is watching the watcher? Who is watching those who are watching the watcher? And so on. Even though we can expand the model indefinitely (within limits set by our resources), this does not seem reasonable, as each layer of control adds complexity.

This recursive iteration may be terminated in several ways. The simplest one is to end up with an element of control that is fully trusted. For example, trusted computing [Pearson2002] conveniently assumes that certain parts of hardware can be trusted without being controlled. Other solutions may loop the chain of control back to us - following the assumption that we can trust ourselves. Elections in democratic societies serve this purpose, providing the control loop that should reaffirm our confidence in the government.
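The recursion and its two ways of terminating can be sketched in Python. This is a minimal illustration under assumptions that are not part of the model: confidence is a number in [0, 1], each instrument of control is watched by at most one other instrument, confidence propagates by multiplying the watcher's report by the confidence in the watcher, and the names `Instrument`, `confidence_in` and `max_depth` are hypothetical. The chain stops at a fully trusted element (as in trusted computing) or at a depth limit standing in for limited resources.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Instrument:
    """An instrument of control (hypothetical structure, for illustration only)."""
    name: str
    fully_trusted: bool = False              # trusted without being controlled
    watcher: Optional["Instrument"] = None   # who is watching this instrument
    watcher_report: float = 0.0              # how well the watcher says it works, in [0, 1]


def confidence_in(instr: Instrument, depth: int = 0, max_depth: int = 3) -> float:
    """Confidence that an instrument of control works, resolved recursively."""
    if instr.fully_trusted:
        return 1.0  # termination: an element that is trusted rather than controlled
    if instr.watcher is None or depth >= max_depth:
        return 0.0  # nobody (affordable) is watching: no confidence via control
    # Assumed rule: discount the watcher's report by the confidence in the watcher.
    return instr.watcher_report * confidence_in(instr.watcher, depth + 1, max_depth)


if __name__ == "__main__":
    root = Instrument("hardware root", fully_trusted=True)
    monitor = Instrument("monitor", watcher=root, watcher_report=0.9)
    audit = Instrument("audit log", watcher=monitor, watcher_report=0.8)
    print(confidence_in(audit))  # about 0.72, i.e. 0.8 * 0.9 * 1.0
```

Looping the chain back to Alice herself corresponds to making her the `fully_trusted` root. A ring of watchers with no trusted element anywhere is cut off by `max_depth` and yields no confidence at all.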

Enablers. Alice's desire is to assess her confidence in Bob within a horizon of the transaction and with regard to a given subject - she wants Bob to behave in a particular way, to help her. Her reasoning about Bob is therefore focused on the specific subject and resides within what we can call the 'frame of discourse', the potentially rich set of entities, evidence, rules, states, etc. associated with a subject.

While concentrating on her main subject, Alice can rely on several supporting processes that facilitate her ability to reason about Bob. She needs a sufficient amount of evidence; she would like to know whether such evidence is true, whether Bob actually exists and whether he is a person. Such enablers indirectly affect the way Alice performs her assessment of confidence. If one or more of the enablers are not satisfied, Alice loses her ability to determine confidence and the process fails.

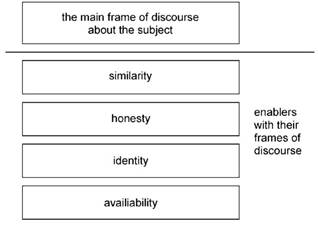

The proposition is that there are four enablers - similarity, honesty, identity and availability - that form four separate frames of discourse below the one defined by the main subject. Those frames can be viewed as stacked one upon the other (in the order presented in Figure 2.5). Each frame is concerned with a separate question, and - interestingly - all those questions refer to Alice's confidence in certain properties of entities. Similarity verifies how confident Alice is that Bob's intentions can be correctly assessed, honesty addresses the question about Alice's confidence in Bob's trustworthiness (which is a prerequisite to accepting Bob as a source of evidence), etc.

Figure 2.5. Frames of discourse with enablers

The honesty enabler is directly referred to in the model in the form of a conftruth factor that influences the weighting of evidence. Other enablers are not directly visible, but all are necessary for the model to operate. For example, similarity is implied by the model: Alice can trust only those who can have intentions (like herself), so that if Bob is dissimilar, then trust cannot be extended to him (while control can).
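The role of conftruth as a weight can be illustrated with a weighted average. The sketch below is an assumption made for illustration, not the model's own formula: each piece of evidence is a value in [0, 1] reported by a named source, Alice's confidence in that source's honesty (a hypothetical `conf_truth` mapping) serves as its weight, and sources she knows nothing about get weight 0.

```python
from typing import Dict, List, Optional, Tuple


def weigh_evidence(
    evidence: List[Tuple[str, float]],  # (source, reported value in [0, 1])
    conf_truth: Dict[str, float],       # Alice's confidence in each source's honesty
) -> Optional[float]:
    """Honesty-weighted average of evidence (hypothetical weighting rule)."""
    weights = [conf_truth.get(source, 0.0) for source, _ in evidence]
    total = sum(weights)
    if total == 0:
        return None  # no source Alice believes: she cannot form an opinion
    return sum(w * value for w, (_, value) in zip(weights, evidence)) / total


if __name__ == "__main__":
    reports = [("Carol", 0.9), ("Eve", 0.1)]
    print(weigh_evidence(reports, {"Carol": 0.8, "Eve": 0.2}))  # close to 0.74
```

Setting Eve's weight to 0 makes her reports irrelevant, which is how a source believed to be lying drops out of the assessment.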

When Alice is reasoning about enablers, she can use the same model that has been presented here, i.e. she can use trust and control to resolve whether she is confident about the subject of the given frame of discourse. This may seem like an impossible task, as every act of reasoning about confidence requires Alice to reason about confidence! However, there is a way out.

Alice should reason about enablers in a bottom-up manner: if she is not confident about evidence, then she should withhold her opinion about identity, etc. Availability of evidence is therefore the basis of confidence about identity (in accordance with e.g. [Giddens1991]), identity allows evidence about honesty to be gathered, etc. However, practice shows that this is not always the case: shared identity can be used to demonstrate similarity, while expectations of similarity (e.g. similar background or interests) support the perception of honesty [Dunn2005].
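The bottom-up order can be sketched as a loop that resolves the enablers from availability upwards and withholds an opinion as soon as one of them is not satisfied. The threshold of 0.5 and the names `assess` and `ENABLER_ORDER` are hypothetical; each enabler is passed as a function so that frames above a failed one are never evaluated.

```python
from typing import Callable, Dict, Optional, Tuple

# Bottom-up order of enablers, as described in the text (availability at the base).
ENABLER_ORDER = ("availability", "identity", "honesty", "similarity")


def assess(
    enablers: Dict[str, Callable[[], float]],  # confidence in each enabler, computed lazily
    main_subject: Callable[[], float],         # confidence in the main subject
    threshold: float = 0.5,                    # hypothetical cut-off for "confident enough"
) -> Tuple[Optional[float], Optional[str]]:
    """Resolve enablers bottom-up; return (confidence, first failed enabler)."""
    for name in ENABLER_ORDER:
        if enablers[name]() < threshold:
            # Withhold opinion: every frame above this one is left unresolved.
            return None, name
    return main_subject(), None


if __name__ == "__main__":
    calls = []

    def level(name: str, value: float) -> Callable[[], float]:
        def check() -> float:
            calls.append(name)  # record which frames were actually evaluated
            return value
        return check

    enablers = {
        "availability": level("availability", 0.9),
        "identity": level("identity", 0.3),
        "honesty": level("honesty", 0.9),
        "similarity": level("similarity", 0.9),
    }
    print(assess(enablers, lambda: 0.7))  # (None, 'identity')
    print(calls)                          # ['availability', 'identity']
```

Because identity fails, honesty and similarity are never examined, matching the rule that Alice withholds her opinion about higher frames when a lower one is unresolved.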

If Alice is not careful, she may easily end up in a self-reinforcing situation with a positive feedback loop: if Bob is similar, then he is believed to be honest; because he is believed to be honest, Alice will accept from him evidence about his similarity, thus indirectly driving her perception of his honesty even further.
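The runaway dynamics can be shown numerically. In this hypothetical sketch, Alice's belief in Bob's honesty simply equals her perceived similarity, and in each round she moves her similarity estimate towards Bob's own claim of being fully similar, by a step proportional to that honesty. Both rules, and the name `feedback_loop`, are assumptions chosen only to expose the loop.

```python
from typing import List


def feedback_loop(similarity: float, claim: float = 1.0, rounds: int = 5) -> List[float]:
    """Perceived similarity after each round of the self-reinforcing loop."""
    history = []
    for _ in range(rounds):
        honesty = similarity  # assumed: "similar, therefore honest"
        # Bob's evidence about his own similarity is accepted in proportion
        # to the honesty that was derived from similarity in the first place.
        similarity += honesty * (claim - similarity)
        history.append(similarity)
    return history


if __name__ == "__main__":
    print(feedback_loop(0.5))  # climbs towards Bob's claim, round after round
```

Starting from any positive value, the estimate approaches whatever Bob claims, while a start at 0 stays at 0: the outcome is produced by the loop itself, not by independent evidence.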

Alice is expected to keep frames of discourse separate, without logical loops, so that she never uses the potential outcome of the reasoning as the input to the same reasoning, whether directly or indirectly. For example, when reasoning about her confidence in the main frame, she may also consider her confidence in Carol's honesty and her confidence in Dave's identity, but not her confidence in Bob's similarity. She must be confident that Bob is similar (or dissimilar to a known extent) prior to thinking of him helping her. For example, if Bob is a dog, Alice cannot expect him to help her with her computer (but she may expect help in tracing a lost ball).
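The requirement that no reasoning feeds on its own outcome is, in graph terms, the requirement that the "uses as input" relation between frames of discourse be acyclic. A minimal sketch, assuming each frame is labelled by an (agent, subject) pair and that Alice records which frames each assessment draws on; the names `find_loop` and `Frame` are hypothetical. A depth-first search reports the first loop it finds.

```python
from typing import Dict, List, Tuple

Frame = Tuple[str, str]  # (agent, subject), e.g. ("Carol", "honesty")


def find_loop(uses: Dict[Frame, List[Frame]]) -> List[Frame]:
    """Return one reasoning loop in `uses`, or [] if the frames are kept separate."""
    IN_PROGRESS, DONE = 1, 2
    state: Dict[Frame, int] = {}
    path: List[Frame] = []

    def visit(frame: Frame) -> List[Frame]:
        state[frame] = IN_PROGRESS
        path.append(frame)
        for needed in uses.get(frame, []):
            if state.get(needed) == IN_PROGRESS:
                # `needed` is still being resolved: its outcome would feed back into itself.
                return path[path.index(needed):] + [needed]
            if needed not in state:
                loop = visit(needed)
                if loop:
                    return loop
        path.pop()
        state[frame] = DONE
        return []

    for frame in uses:
        if frame not in state:
            loop = visit(frame)
            if loop:
                return loop
    return []


if __name__ == "__main__":
    acceptable = {
        ("Bob", "subject"): [("Carol", "honesty"), ("Dave", "identity")],
        ("Carol", "honesty"): [("Carol", "identity")],
    }
    looping = {
        ("Bob", "similarity"): [("Bob", "honesty")],
        ("Bob", "honesty"): [("Bob", "similarity")],
    }
    print(find_loop(acceptable))  # []
    print(find_loop(looping))     # [('Bob', 'similarity'), ('Bob', 'honesty'), ('Bob', 'similarity')]
```

The first graph mirrors the permitted example (Carol's honesty and Dave's identity support the main frame); the second is the similarity-honesty feedback loop, which the search exposes.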

The foundation and beginning of reasoning should be Alice herself. She must be confident that she knows enough about herself, that she knows herself, that she understands herself and that she is honest with herself before thinking about others. If, for example, she does not understand herself, she may have problems establishing her confidence in Bob's similarity - she may not have anything to compare Bob to.

If she is not honest with herself, she may be willing to lie to herself about facts, making evidence useless. Starting with herself, Alice can gradually establish her confidence in others using her own first-hand experience and later also opinions provided by others - but then she should first establish her confidence in their honesty. This calls for a stepwise approach, driven by introduction, exploration and incremental widening of the group that Alice is confident about.

It is also possible to let Alice resolve all enablers simultaneously, without any reference or link to herself, only on the basis of observations, e.g. by allowing Alice to interact with a group of strangers. On the grounds of logic alone (e.g. when resolved only at the level of honesty), this situation may end up in an unsolvable paradox: Alice will not be able to determine who is telling the truth. However, if she is resolving the problem on all levels at the same time, she may decide on the basis of greater availability or perceived similarity [Liu2004]. Similarly, the perception of strong identity in inter-personal contacts may increase the perception of honesty [Goffee2005].

The concept of separate layers of discourse is presented e.g. in [Huang2006], with two layers: one that refers to the main frame and another that refers to the frame of truth (honesty). Several interesting social phenomena are related to enablers. Gossip (which seems to stabilise society and contribute to 'social grooming' [Prietula2001]), the perception of identity and honesty, empathy [Feng2004] (which contributes to the perception of similarity) and emotions [Noteboom2005] (which facilitate experimentation and affect risk-taking and general accessibility) rightfully belong here.

Similarity. The main problem that is addressed by similarity is whether Bob is capable of intentions (whether Bob is an intentional agent) and whether his perception of norms, obligations, intentions and communication agrees with Alice's. Without discussing inter-cultural problems [Kramer2004], [Hofstede2006], even the simple act of differentiation between Bob-person and Bob-company has a significant impact on how Alice feels towards Bob and whether she can develop confidence in Bob.

The problem of similarity also applies to relationships. For example (see e.g. [Fitness2001]), in a communal relationship Alice and Bob are expected to care about each other's long-term welfare, while in an exchange relationship they are bound only for the duration of the exchange. If Alice and Bob have dissimilar perceptions of their relationship, the formation of confidence may be problematic and the destruction of the relationship is likely.

Certain clues that allow Alice to determine whether Bob is a person are of value in creating trust [Riegelsberger2003], even if they do not directly provide information about trust itself. Empathy, the ability to accurately predict others' feelings, seems to contribute to trust [Feng2004] by strengthening the perception of similarity.

The simple act of Bob sending his picture to Alice by e-mail increases her willingness to develop the relationship, potentially towards trust, by the process of re-embedding [Giddens1988]. Note, however, that the fact that Alice is good at dealing with people face-to-face may actually prevent her from developing trust on-line [Feng2004], as the similarity is perceived differently in direct contacts and through digital media.

The role of the similarity enabler is to provide an estimate of the 'semantic gap' [Abdul-Rahman2005] that can potentially exist between the way Alice and Bob understand and interpret evidence. The larger the gap, the harder it is to build trust, as evidence may be misleading or inconclusive; hence evidence from similar agents should carry more weight [Lopes2006].

Similarity and the semantic gap are not discussed in the model - we conveniently assume that all agents are similar to Alice in their reliance on reasoning, social protocols, etc. (which is a very reasonable assumption for e.g. a set of autonomous agents, but may be problematic for a multi-cultural society). If there is any concern about the semantic gap, it may be captured by a lower value of Alice's confidence in truth.

Honesty. Alice's confidence in others' honesty is strongly and visibly present throughout the model in the form of a weighting element for all evidence. This particular frame of discourse focuses on the question of truth. The role of the honesty enabler is to allow Alice to accept opinions that come from others, in the form of a qualified reliance on information [Gerck2002].

The ability to tell the truth is the core competence of agents and it seems that no confidence can be established if we do not believe that the truth has been disclosed. Unfortunately, the construct of truth is also vague and encompasses several concepts. We may assume, for the purpose of the model, that by 'truth' we understand here information that is timely, correct and complete to the best understanding of the agent that discloses such information.

This factors out the concept of objective truth but factors in the equally complicated concept of subjective truth. However, we cannot ask for more, as the agent can hardly achieve absolute certainty about the information it discloses.

Confidence in truth may be quite hard to establish. In digital systems, confidence in truth can sometimes be assumed a priori, without any proof. For example, when a mobile operator issues a SIM card, it is confident that the card (to be exact: any device that responds correctly to the cryptographic challenge) reports what is true (e.g. the content of a phone book) to the best of its understanding. This assumption can be challenged in principle (as the card cannot even reasonably check what physical form it has), but it holds in practice.
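The SIM example rests on a challenge-response check: once a device proves knowledge of a shared secret, whatever it reports is accepted as true. The sketch below shows only that pattern. Real SIM authentication uses operator-specific algorithms (for example MILENAGE in 3G/4G networks), not the HMAC-SHA256 used here as a stand-in, and the `Card` class and `accept_report` function are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Dict, Optional


class Card:
    """A hypothetical SIM-like device holding a shared secret and some data."""

    def __init__(self, key: bytes, phone_book: Dict[str, str]):
        self._key = key
        self.phone_book = phone_book

    def respond(self, challenge: bytes) -> bytes:
        # Stand-in for the real authentication algorithm: HMAC-SHA256.
        return hmac.new(self._key, challenge, hashlib.sha256).digest()


def accept_report(card: Card, operator_key: bytes) -> Optional[Dict[str, str]]:
    """Accept the card's data as true only if it answers a fresh challenge."""
    challenge = secrets.token_bytes(16)
    expected = hmac.new(operator_key, challenge, hashlib.sha256).digest()
    if hmac.compare_digest(card.respond(challenge), expected):
        # Confidence in truth is assumed a priori: the content itself is not checked.
        return card.phone_book
    return None


if __name__ == "__main__":
    key = secrets.token_bytes(32)
    genuine = Card(key, {"Alice": "+00 000 000"})
    forged = Card(secrets.token_bytes(32), {"Alice": "+00 000 000"})
    print(accept_report(genuine, key))  # the phone book
    print(accept_report(forged, key))   # None
```

The check establishes only that the device holds the key; the phone book is taken on trust, which is exactly the a priori assumption described above.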

If Alice assumes that certain entities may not tell the truth, she may want to identify and exclude 'liars' from her horizon. This, however, leads to challenges associated with the construct of truth. Specifically, the ability to differentiate between the unintentional liar and the intentional one or between the benevolent messenger of unpopular news and the dishonest forwarder of popular ones may prove to be hard. For example, the 'liar detection' system [Fernandes2004] that is based on the coherence of observations punishes the first entity that brings unpopular news, effectively removing incentives to act as a whistleblower.
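The whistleblower problem can be reproduced with a simple coherence rule. This is not the algorithm of [Fernandes2004]; it is a hypothetical stand-in in which every report is compared with the median of all reports and any agent that deviates by more than a tolerance has its reputation halved. The function name `coherence_check` and the parameters `tolerance` and `penalty` are assumptions.

```python
from statistics import median
from typing import Dict


def coherence_check(
    reports: Dict[str, float],
    reputation: Dict[str, float],
    tolerance: float = 0.2,
    penalty: float = 0.5,
) -> float:
    """Penalise agents whose report deviates from the consensus; return the consensus."""
    consensus = median(reports.values())
    for agent, value in reports.items():
        if abs(value - consensus) > tolerance:
            reputation[agent] = reputation.get(agent, 1.0) * penalty
    return consensus


if __name__ == "__main__":
    # The situation has just turned bad (true value near 0.1), but only Carol noticed.
    reports = {"Carol": 0.1, "Dave": 0.9, "Eve": 0.9}
    reputation = {"Carol": 1.0, "Dave": 1.0, "Eve": 1.0}
    coherence_check(reports, reputation)
    print(reputation)  # {'Carol': 0.5, 'Dave': 1.0, 'Eve': 1.0}
```

Carol, who reported the change first and correctly, is the only one punished: a rule based on coherence alone cannot tell her apart from a liar.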

Identity. The identity enabler allows Alice to consolidate evidence that has been collected at different moments in time, i.e. to correlate evidence about Bob. Within the model, identity is expected to be reasonably permanent; this is one of the simplifications of the model. Bob is always Bob, only Bob, and everybody knows that he is Bob.

However, in relationships managed e.g. over the Internet [Turkle1997] we can see the erosion of this simplistic assumption as identities proliferate and confidence diminishes. While identity is central to the notion of confidence, assuring identity is both challenging and beneficial in environments such as pervasive computing [Seigneur2005] or even simple e-mail [Seigneur2004].

The relationship between confidence and identity is complex and is discussed elsewhere in this book. In short, identity is an enabler for Alice's confidence, Alice can be confident in an identity and finally the shared identity can reaffirm trust between Alice and Bob.

Bob's identity is subjectively perceived by Alice as the sum of evidence that she has associated with him [Giddens1991]: his identity is built on her memories of him (in the same way that she built her own identity by self-reflecting on her own memories). Potentially this may lead to an identity split: if evidence about Bob is of a mixed kind (some good and some bad), then, depending on the way Alice interprets it, she may end up either with a mixed opinion about one Bob or with firm opinions about two Bobs - one good and one bad.
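The identity split can be illustrated by interpreting the same mixed evidence in two ways. In this hypothetical sketch, evidence is a list of scores in [0, 1]; the "one Bob" reading averages everything, while the "two Bobs" reading splits the evidence at a threshold (an assumed parameter) and averages each part separately. The function names are illustrative only.

```python
from statistics import mean
from typing import List, Tuple


def one_bob(evidence: List[float]) -> float:
    """A single, mixed opinion about one Bob."""
    return mean(evidence)


def two_bobs(evidence: List[float], threshold: float = 0.5) -> Tuple[float, float]:
    """Firm opinions about a 'good Bob' and a 'bad Bob' (hypothetical split rule)."""
    good = [e for e in evidence if e >= threshold]
    bad = [e for e in evidence if e < threshold]
    if not good or not bad:
        raise ValueError("evidence is not mixed; there is nothing to split")
    return mean(good), mean(bad)


if __name__ == "__main__":
    evidence = [0.9, 0.8, 0.1, 0.2]
    print(one_bob(evidence))   # about 0.5: an ambivalent view of one person
    print(two_bobs(evidence))  # about (0.85, 0.15): firm views of two "people"
```

Nothing in the evidence itself decides between the two readings; the choice of interpretation alone produces either one ambivalent identity or two firm ones.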

Accessibility. Apart from Alice's willingness to evaluate her confidence, she needs input to this process - she needs evidence. Without evidence her confidence will be reduced to faith [Arion1994], the belief that can exist without evidence [Marsh1994]. Our willingness to gather evidence is usually taken for granted, as there is a strong belief in the basic anxiety [Giddens1991] that drives us towards experimentation and learning. However, traumatic experiences or the potentially high complexity of evidence-gathering may inhibit people from engaging in such activity.

The model does not cater for such situations, but it allows for their interpretation by the construct of certainty. The less access Alice has to evidence, the less evidence she can gather and the less certain she is about any outcome of her reasoning.
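One way to make "less evidence, less certainty" concrete is the uncertainty mass of Jøsang's subjective logic, swapped in here as an illustration rather than taken from the model: with r positive and s negative observations and a prior weight W (conventionally 2), uncertainty is W / (r + s + W). The function name `certainty` is hypothetical. With no evidence at all, certainty is zero, which corresponds to the faith mentioned above.

```python
def certainty(positive: int, negative: int, prior_weight: float = 2.0) -> float:
    """Certainty (1 - uncertainty) after a number of observations.

    Uses the subjective-logic mapping u = W / (r + s + W), with W = prior_weight.
    """
    if positive < 0 or negative < 0:
        raise ValueError("observation counts cannot be negative")
    uncertainty = prior_weight / (positive + negative + prior_weight)
    return 1.0 - uncertainty


if __name__ == "__main__":
    for n in (0, 2, 8, 98):
        print(n, certainty(n, 0))  # certainty rises with n: roughly 0.0, 0.5, 0.8, 0.98
```

Certainty depends only on how much evidence Alice could gather, not on whether it is good or bad, which matches the role of access to evidence described above.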

Date added: 2023-09-23.