Preventive Traffic Control. Call Admission Control

Preventive control for ATM has two major components: call admission control and usage parameter control (8). Admission control determines whether to accept or reject a new call at the time of call set-up. This decision is based on the traffic characteristics of the new call and the current network load. Usage parameter control enforces the traffic parameters of the call after it has been accepted into the network. This enforcement is necessary to ensure that the call’s actual traffic flow conforms with that reported during call admission.

Before describing call admission and usage parameter control in more detail, it is important to first discuss the nature of multimedia traffic. Most ATM traffic belongs to one of two general classes of traffic: continuous traffic and bursty traffic. Sources of continuous traffic (e.g., constant bit rate video, voice without silence detection) are easily handled because their resource utilization is predictable and they can be deterministically multiplexed. However, bursty traffic (e.g., voice with silence detection, variable bit rate video) is characterized by its unpredictability, and this kind of traffic complicates preventive traffic control.

Burstiness is a parameter describing how densely or sparsely cell arrivals occur. There are a number of ways to express traffic burstiness, the most typical of which are the ratio of peak bit rate to average bit rate and the average burst length. Several other measures of burstiness have also been proposed (8). It is well known that burstiness plays a critical role in determining network performance; traffic control mechanisms must therefore reduce the negative impact of bursty traffic.
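The two measures named above can be computed directly. The following is a minimal sketch; the function names and the sample figures (a 64 kbit/s peak with a 25.6 kbit/s average) are illustrative assumptions, not values from the text.

```python
def burstiness_ratio(peak_rate_bps: float, average_rate_bps: float) -> float:
    """Ratio of peak bit rate to average bit rate (1.0 means constant bit rate)."""
    if average_rate_bps <= 0:
        raise ValueError("average rate must be positive")
    return peak_rate_bps / average_rate_bps


def average_burst_length(burst_lengths_cells: list[int]) -> float:
    """Mean number of cells per burst, given the observed burst lengths."""
    if not burst_lengths_cells:
        raise ValueError("at least one burst is required")
    return sum(burst_lengths_cells) / len(burst_lengths_cells)


# Hypothetical source: 64 kbit/s while active, 25.6 kbit/s on average.
ratio = burstiness_ratio(64_000, 25_600)
mean_burst = average_burst_length([10, 20, 30])
```

A ratio close to 1 indicates continuous traffic; larger ratios indicate burstier sources.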

Call Admission Control. Call admission control is the process by which the network decides whether to accept or reject a new call. When a new call requests access to the network, it provides a set of traffic descriptors (e.g., peak rate, average rate, average burst length) and a set of quality of service requirements (e.g., acceptable cell loss rate, acceptable cell delay variation, acceptable delay).

The network then determines, through signaling, whether it has enough resources (e.g., bandwidth, buffer space) to support the new call’s requirements. If it does, the call is immediately accepted and allowed to transmit data into the network. Otherwise, it is rejected. Call admission control prevents network congestion by limiting the number of active connections to a level at which the network resources are adequate to maintain quality of service guarantees.

One of the most common ways for an ATM network to make a call admission decision is to use the call’s traffic descriptors and quality of service requirements to predict the “equivalent bandwidth” required by the call. The equivalent bandwidth determines how many resources the network must reserve to support the new call at its requested quality of service. For continuous, constant bit rate calls, determining the equivalent bandwidth is simple: it is equal to the peak bit rate of the call.

For bursty connections, however, determining the equivalent bandwidth should take into account such factors as the call’s burstiness ratio (the ratio of peak bit rate to average bit rate), burst length, and burst interarrival time. The equivalent bandwidth for bursty connections must be chosen carefully to limit congestion and cell loss while maximizing the number of connections that can be statistically multiplexed.
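The admission decision described above can be sketched as a bandwidth check on a single link. This is only an illustration: the `CallRequest` and `Link` classes are hypothetical, and the interpolation factor `alpha` used for bursty calls is a placeholder assumption standing in for a real equivalent-bandwidth model, which would also account for burst length, interarrival time, and the requested cell loss rate.

```python
from dataclasses import dataclass


@dataclass
class CallRequest:
    peak_rate: float     # bits per second
    average_rate: float  # bits per second


def equivalent_bandwidth(call: CallRequest, alpha: float = 0.5) -> float:
    """Illustrative estimate between the average and peak rates.

    For a constant bit rate call (peak == average) this returns the peak
    rate. The factor alpha is an assumption, not a formula from the text.
    """
    return call.average_rate + alpha * (call.peak_rate - call.average_rate)


class Link:
    def __init__(self, capacity: float) -> None:
        self.capacity = capacity
        self.reserved = 0.0

    def admit(self, call: CallRequest, alpha: float = 0.5) -> bool:
        """Accept the call only if its equivalent bandwidth still fits."""
        needed = equivalent_bandwidth(call, alpha)
        if self.reserved + needed <= self.capacity:
            self.reserved += needed
            return True
        return False


link = Link(capacity=10e6)
cbr_call = CallRequest(peak_rate=4e6, average_rate=4e6)
vbr_call = CallRequest(peak_rate=8e6, average_rate=2e6)
accepted = [link.admit(cbr_call), link.admit(vbr_call), link.admit(cbr_call)]
```

A smaller `alpha` admits more bursty calls and relies more heavily on statistical multiplexing; a value of 1 reserves the peak rate for every call.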

Usage Parameter Control. Call admission control is responsible for admitting or rejecting new calls. However, call admission by itself is ineffective if the call does not transmit data according to the traffic parameters it provided. Users may intentionally or accidentally exceed the traffic parameters declared during call admission, thereby overloading the network.

In order to prevent the network users from violating their traffic contracts and causing the network to enter a congested state, each call’s traffic flow is monitored and, if necessary, restricted. This is the purpose of usage parameter control. (Usage parameter control is also commonly referred to as policing, bandwidth enforcement, or flow enforcement.)

To monitor a call’s traffic efficiently, the usage parameter control function must be located as close as possible to the actual source of the traffic. An ideal usage parameter control mechanism should have the ability to detect parameter-violating cells, appear transparent to connections respecting their admission parameters, and rapidly respond to parameter violations. It should also be simple, fast, and cost effective to implement in hardware. To meet these requirements, several mechanisms have been proposed and implemented (8).

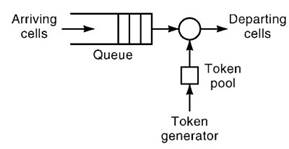

The leaky bucket mechanism (originally proposed in Ref. 10) is a typical usage parameter control mechanism used for ATM networks. It can simultaneously enforce the average bandwidth and the burst factor of a traffic source. One possible implementation of the leaky bucket mechanism is to control the traffic flow by means of tokens. A conceptual model for the leaky bucket mechanism is illustrated in Fig. 8.

In Fig. 8, an arriving cell first enters a queue. If the queue is full, cells are simply discarded. To enter the network, a cell must first obtain a token from the token pool; if there is no token, a cell must wait in the queue until a new token is generated. Tokens are generated at a fixed rate corresponding to the average bit rate declared during call admission. If the number of tokens in the token pool exceeds some predefined threshold value, token generation stops.

Figure 8. Leaky bucket mechanism

This threshold value corresponds to the burstiness of the transmission declared at call admission time; for larger threshold values, a greater degree of burstiness is allowed. This method enforces the average input rate while allowing for a certain degree of burstiness.
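The token-based leaky bucket described above can be sketched as a small discrete-time simulation. The class and parameter names are hypothetical, and the model assumes one token is generated per call to `tick`, which is a simplification of a fixed-rate token generator.

```python
from collections import deque


class LeakyBucket:
    def __init__(self, queue_size: int, pool_limit: int) -> None:
        self.queue: deque = deque()
        self.queue_size = queue_size  # cells that may wait for a token
        self.pool_limit = pool_limit  # threshold on stored tokens
        self.tokens = 0
        self.discarded = 0

    def _drain(self, sent: list) -> None:
        # Each queued cell consumes one token to enter the network.
        while self.queue and self.tokens > 0:
            self.tokens -= 1
            sent.append(self.queue.popleft())

    def tick(self, arrivals: list) -> list:
        """Advance one token interval and return the cells admitted."""
        # Token generation stops once the pool reaches its threshold.
        if self.tokens < self.pool_limit:
            self.tokens += 1
        sent: list = []
        self._drain(sent)  # serve cells that were already waiting
        for cell in arrivals:
            if len(self.queue) < self.queue_size:
                self.queue.append(cell)
                self._drain(sent)
            else:
                self.discarded += 1  # queue full: the cell is dropped
        return sent


bucket = LeakyBucket(queue_size=2, pool_limit=3)
for _ in range(4):
    bucket.tick([])                      # idle period: the pool fills to 3
burst = bucket.tick([1, 2, 3, 4, 5, 6])  # six cells arrive at once
```

In this run the three stored tokens let three cells through immediately, two cells wait in the queue, and one is discarded. A larger `pool_limit` would let a longer burst pass at once, which is the sense in which the threshold sets the permitted burstiness.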

One disadvantage of the leaky bucket mechanism is that the bandwidth enforcement introduced by the token pool is in effect even when the network load is light and there is no need for enforcement. Another disadvantage of the leaky bucket mechanism is that it may mistake nonviolating cells for violating cells. When traffic is bursty, a large number of cells may be generated in a short period of time, while conforming to the traffic parameters claimed at the time of call admission. In such situations, none of these cells should be considered violating cells.

Yet in practice, the leaky bucket mechanism may erroneously identify such cells as violating the admission parameters. A virtual leaky bucket mechanism (also referred to as a marking method) alleviates these disadvantages (11). In this mechanism, violating cells, rather than being discarded or buffered, are permitted to enter the network at a lower priority (CLP = 1).

These violating cells are discarded only when they arrive at a congested node. If there are no congested nodes along the routes to their destinations, the violating cells are transmitted without being discarded. The virtual leaky bucket mechanism can easily be implemented using the leaky bucket method described earlier. When the queue length exceeds a threshold, cells are marked as “droppable” instead of being discarded. The virtual leaky bucket method not only allows the user to take advantage of a light network load but also allows a larger margin of error in determining the token pool parameters.
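The marking behavior can be illustrated with a simplified sketch. It omits the input queue and treats "no token available" as the violation condition, which is an assumption made for brevity; the text describes marking in terms of a queue-length threshold. The `Cell`, `VirtualLeakyBucket`, and `forward` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Cell:
    payload: str
    clp: int = 0  # 0 = normal priority, 1 = droppable


class VirtualLeakyBucket:
    def __init__(self, pool_limit: int, initial_tokens: int = 0) -> None:
        self.pool_limit = pool_limit
        self.tokens = initial_tokens

    def add_token(self) -> None:
        """Called at the declared average rate; stops at the threshold."""
        if self.tokens < self.pool_limit:
            self.tokens += 1

    def police(self, cell: Cell) -> Cell:
        """Pass every cell, tagging those without a token as CLP = 1."""
        if self.tokens > 0:
            self.tokens -= 1
        else:
            cell.clp = 1
        return cell


def forward(cells: list[Cell], congested: bool) -> list[Cell]:
    """A downstream node drops marked cells only when it is congested."""
    return [c for c in cells if not (congested and c.clp == 1)]


vlb = VirtualLeakyBucket(pool_limit=2, initial_tokens=2)
policed = [vlb.police(Cell(str(i))) for i in range(4)]
light_load = forward(policed, congested=False)
heavy_load = forward(policed, congested=True)
```

Under light load all four cells are delivered; under congestion only the two unmarked cells survive.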

Reactive Traffic Control. Preventive control is appropriate for most types of ATM traffic. However, there are cases where reactive control is beneficial. For instance, reactive control is useful for service classes like ABR, which allow sources to use bandwidth not being used by calls in other service classes. Such a service would be impossible with preventive control because the amount of unused bandwidth in the network changes dynamically, and the sources can only be made aware of the amount through reactive feedback.

There are two major classes of reactive traffic control mechanisms: rate-based and credit-based (12,13). Most rate-based traffic control mechanisms establish a closed feedback loop in which the source periodically transmits special control cells, called resource management cells, to the destination (or destinations). The destination closes the feedback loop by returning the resource management cells to the source.

As the feedback cells traverse the network, the intermediate switches examine their current congestion state and mark the feedback cells accordingly. When the source receives a returning feedback cell, it adjusts its rate, either decreasing it in the case of network congestion or increasing it in the case of network underutilization. An example of a rate-based ABR algorithm is the Enhanced Proportional Rate Control Algorithm (EPRCA), which was proposed, developed, and tested through the course of ATM Forum activities (12).
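The feedback loop can be sketched as follows. This is not EPRCA: the additive-increase, multiplicative-decrease rule, the load threshold, and all names and constants are assumptions chosen only to show how a source might react to a marked resource management cell.

```python
from dataclasses import dataclass


@dataclass
class RMCell:
    congested: bool = False


def traverse(rm: RMCell, switch_loads: list[float], threshold: float = 0.8) -> RMCell:
    """Each switch on the path marks the cell if its load exceeds the threshold."""
    for load in switch_loads:
        if load > threshold:
            rm.congested = True
    return rm


def adjust_rate(rate: float, rm: RMCell, min_rate: float, peak_rate: float,
                increase: float = 1.0, decrease_factor: float = 0.5) -> float:
    """Lower the rate on congestion, otherwise raise it, within the allowed range."""
    if rm.congested:
        return max(min_rate, rate * decrease_factor)
    return min(peak_rate, rate + increase)


# One congested switch (load 0.9) on the path halves the source rate.
slowed = adjust_rate(10.0, traverse(RMCell(), [0.3, 0.9]), min_rate=1.0, peak_rate=20.0)
# An uncongested path lets the source increase its rate by one step.
raised = adjust_rate(10.0, traverse(RMCell(), [0.3, 0.5]), min_rate=1.0, peak_rate=20.0)
```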

Credit-based mechanisms use link-by-link traffic control to eliminate loss and optimize utilization. Intermediate switches exchange resource management cells that contain “credits,” which reflect the amount of buffer space available at the next downstream switch. A source cannot transmit a new data cell unless it has received at least one credit from its downstream neighbor. An example of a credit-based mechanism is the Quantum Flow Control (QFC) algorithm, developed by a consortium of researchers and ATM equipment manufacturers (13).
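A single hop of credit-based control can be sketched as below. This is not QFC: the `CreditLink` class is hypothetical, the sender is assumed to start with one credit per downstream buffer slot, and credits are returned instantly rather than carried in resource management cells over a link with propagation delay.

```python
class CreditLink:
    """An upstream sender and a downstream buffer joined by one link."""

    def __init__(self, buffer_size: int) -> None:
        self.credits = buffer_size  # one credit per free downstream slot
        self.buffer: list = []

    def send(self, cell) -> bool:
        """Transmit only if a credit is available; otherwise the sender waits."""
        if self.credits == 0:
            return False
        self.credits -= 1
        self.buffer.append(cell)
        return True

    def drain_one(self):
        """Downstream forwards one cell and returns a credit upstream."""
        if not self.buffer:
            return None
        cell = self.buffer.pop(0)
        self.credits += 1
        return cell


hop = CreditLink(buffer_size=2)
results = [hop.send("a"), hop.send("b"), hop.send("c")]  # third send must wait
forwarded = hop.drain_one()                              # frees one slot
retry = hop.send("c")
```

Because a cell is sent only when a buffer slot is known to be free, the downstream buffer in this sketch can never overflow, which is how credit-based schemes avoid cell loss.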
